Last week, the Industrial Internet Consortium -- a group of more than 150 companies working on a common path toward ubiquitous, standards-based machine-to-machine communication -- launched an ambitious project aimed at bringing real-time data analytics and control to the world of microgrids, and potentially, the grid at large.

Building on work being done by Duke Energy and its “Coalition of the Willing” vendor partners, the project, officially titled the Communication and Control Testbed for Microgrid Applications, is seeking to test a common set of technology standards and data models for distributed energy devices and computing platforms.

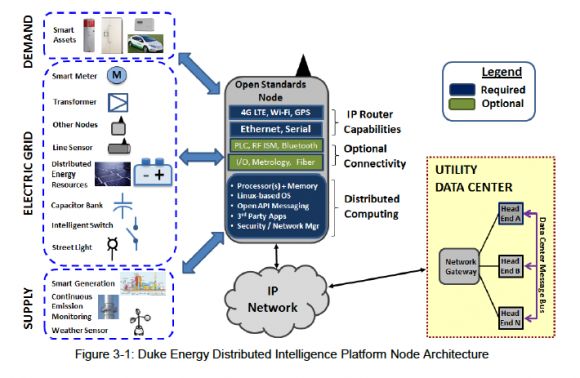

The end goal is to allow smart solar PV inverters, energy storage systems, plug-in electric vehicles, responsive building energy loads, and other grid-edge systems to work in real time with the utility smart grid platforms they’re connected to -- not through complicated, project-by-project integration, but in a more “plug-and-play” fashion.

That’s a tough challenge, as we’ve noted in our ongoing coverage of efforts on this front. To date, the world’s microgrids have been built with components from different vendors, using different technology implementations, which makes integrating them a complex and time-consuming task, not easily replicable from one project to the next.

While microgrids are being designed and built by grid giants like Siemens, General Electric, ABB, S&C Electric, Schneider Electric and Toshiba, power system specialists like Power Analytics and Spirae, and even third-party solar companies like SolarCity, they’re not necessarily being put together in ways that can accommodate innovation and participation by third parties.

But this new project is one of the most comprehensive efforts yet to bring standards to this nascent industry. Its participants include IIC members Real-Time Innovations, National Instruments and Cisco; utilities Duke Energy, Southern California Edison and CPS Energy, the municipal utility of San Antonio, Texas; and the Smart Grid Interoperability Panel (SGIP) industry organization.

It also has an interesting set of underlying technologies to build on. The first tool is the secured messaging protocol Data Distribution Service (DDS), originally developed for the U.S. Navy to connect shipboard IT systems in a real-time, peer-to-peer network, which is now in use to operate Siemens Wind Power turbines and big U.S. hydropower projects. Real-Time Innovations is providing its open implementation of DDS for this project, and National Instruments and Cisco are providing the networking and computing platforms to carry it into the field.

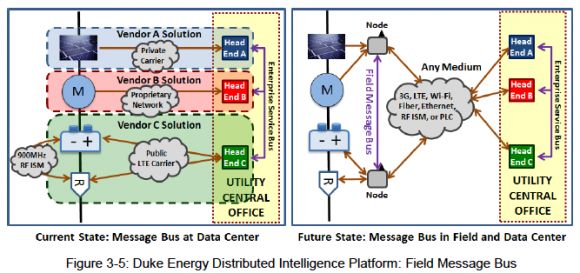

The second is the Open Field Message Bus (OpenFMB) distributed intelligence reference architecture, published by Duke earlier this year to show other utilities and vendors how it and its partners are connecting disparate devices in ways that allow them to communicate and react to rapidly changing conditions on the grid. That’s led SGIP to launch a working group to codify the data models, service requirements and standards for OpenFMB, so that they can be replicated by other industry players.
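To make the "field message bus" idea concrete, here is a minimal sketch of topic-based publish/subscribe messaging, the general pattern that lets one device's data reach any number of interested peers without point-to-point integration. It is a toy, in-process stand-in written for illustration only: the `FieldBus` class, its methods and the topic name are hypothetical, and none of this is the DDS or OpenFMB API.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class FieldBus:
    """Toy in-process topic bus (hypothetical; not DDS or OpenFMB)."""

    def __init__(self) -> None:
        # Map each topic name to the handlers subscribed to it.
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, sample: Any) -> int:
        """Deliver sample to every subscriber of topic; return the delivery count."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(sample)
        return len(handlers)


# Two devices listen to the same solar output topic (names are made up).
bus = FieldBus()
battery_inbox: list = []
relay_inbox: list = []
bus.subscribe("pv/output_kw", battery_inbox.append)
bus.subscribe("pv/output_kw", relay_inbox.append)

delivered = bus.publish("pv/output_kw", 42.5)
```

The publisher never names its receivers. A new device joins by subscribing to a topic, which is the property that makes a "plug-and-play" approach plausible.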

So how will these technology tools be put together in ways that could lead to real-world implementations? Brett Murphy, director of business development for RTI’s Industrial Internet of Things business group, explained how the partners plan to move from proof of concept to real-world implementation over the next few years.

From prototype to field deployment to an industry-wide standard?

Phase one of the project will take place over the next few months, Murphy said. “We will pull together our software, Cisco’s routers, and National Instruments’ grid automation system, CompactRIO, and put together a proof of concept” in National Instruments’ Austin, Texas, lab, integrating several power outlet nodes, a grid relay device, and a solar PV array, he said.

“Phase two is, let’s get a little bit more real -- but let’s not shut off somebody’s power, or blow something up in the field,” he said. That’s where utility partner Southern California Edison comes in. In early March, “we met up with Andy Paylan of Southern California Edison. He runs their smart grid lab -- and it’s a very cool lab,” combining high-tech power systems simulation software with lab space to connect solar inverters, plug-in EV chargers and distribution grid equipment.

Paylan, lead engineer for SCE’s Advanced Technology group, described his lab’s role as “testing many, many platforms, and many, many control algorithms. The premise is that as you’re close to or at the edge of the distribution system, you can respond very quickly to solar PV intermittency,” and other potentially grid-destabilizing effects that can arise with the growth of distributed energy resources.

“This whole concept of a field message bus with low latency and interoperability is yet another platform for us to be able to test before any deployments in the field,” he said. Bringing it to that level of readiness will require other work, such as the DDS security protocols that RTI is working on, as well as the device-level security that it’s seeking help with from potential partners like Intel, Murphy noted.

Phase three of the project is when the real-world testing will take place, and CPS Energy has already picked out the sites. “Our first key project is a microgrid we’re looking to build at a local facility,” Raiford Smith, the former chief of Duke’s emerging technologies group who joined CPS as vice president of corporate development and planning last year, said in an interview this week. Featuring a solar PV system, a 75-kilowatt lithium-ion battery and utility grid devices, “that’s a small-scale implementation of these interoperability capabilities,” and it is set for completion in the next 18 months, he said.

After that, “we will take it to the Grid of the Future,” a CPS project centered on a substation serving 15 circuits and about 30,000 customers, he said. The site also has 5.5 megawatts of utility-scale solar, about 70 different rooftop PV systems, several plug-in EV chargers, and real-time demand response capabilities through Consert, the startup bought by Toshiba’s Landis+Gyr in 2013.

That project has a three-year rollout schedule, which likely ties in with the length of time it will take for the IIC coalition and SGIP to work out the challenges of bringing Duke’s OpenFMB reference architecture to the level of development that can be taken to standards bodies like NIST for codification. In the meantime, industry groups like EPRI, federal labs like NREL and utilities in the U.S. and Canada are also getting involved, he said: “We’re starting to see that critical mass building.”

Why interoperability matters across devices and data models

There are lots of reasons why the utility industry at large, and the companies working on bringing distributed energy resources to bear at grid scale, might want this kind of work to move forward, Murphy said.

“The most important element is the ability to do...edge-to-edge communications and control,” he said. Today’s centrally controlled grid management platforms, built on the premise of 15-minute intervals to call on generation and grid resources, can’t react quickly enough to mitigate the fast-changing conditions that can come across distribution grids when clouds pass over a bank of rooftop solar panels, or when lots of homeowners plug in their electric vehicles at the same time, he explained.

“There’s not time to go back to the substation, and certainly not to the central control, when a cloud goes over, or the wind suddenly dies,” he said. “You at least have to turn on a battery backup or local power backup to respond to that event, within milliseconds ideally. That’s one of the key pieces.”
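As a rough illustration of what a local response might look like, the sketch below shows a rule an edge node could apply on its own when solar output suddenly falls: cover the shortfall from a battery, up to the battery's limit. The function name, the threshold and all the numbers are assumptions made for this example, not anything described by the project partners.

```python
def battery_dispatch_kw(
    prev_pv_kw: float,
    pv_kw: float,
    battery_max_kw: float,
    drop_threshold_kw: float = 5.0,
) -> float:
    """Return the battery output needed to cover a sudden PV drop.

    Small dips below drop_threshold_kw are ignored; larger drops are
    covered in full, capped at the battery's maximum output.
    """
    drop = prev_pv_kw - pv_kw
    if drop < drop_threshold_kw:
        return 0.0
    return min(drop, battery_max_kw)


# Illustrative values only: a cloud cuts PV output from 100 kW to 60 kW.
small_dip = battery_dispatch_kw(100.0, 98.0, battery_max_kw=75.0)
cloud_pass = battery_dispatch_kw(100.0, 60.0, battery_max_kw=75.0)
deep_drop = battery_dispatch_kw(100.0, 10.0, battery_max_kw=75.0)
```

Because the decision uses only local measurements, it involves no round trip to a substation or control center. A real controller would also have to account for battery state of charge, ramp rates and protection settings.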

“The other idea is to put in a lot more intelligence and analytics on these compute nodes that are down near the edge,” he said. “You might hear Cisco talk about fog computing -- well, that’s their concept for handling this. They can take huge amounts of data, and boil it down, and then send the important data back to the cloud.” That allows utilities or grid operators to monitor and manage whatever these distributed, coordinated end nodes are doing in the field, since they are responsible for making sure the nodes play a positive role in keeping the electricity system secure, reliable and efficient.
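One simple way to picture "boiling down" data at the edge is to reduce a window of raw samples to a handful of summary values before anything is sent upstream. The sketch below assumes a hypothetical `summarize_window` helper and made-up field names; it is not taken from Cisco's or any other vendor's software.

```python
from statistics import mean
from typing import Dict, Sequence


def summarize_window(samples_kw: Sequence[float]) -> Dict[str, float]:
    """Reduce a window of raw power samples to a compact summary record."""
    if not samples_kw:
        raise ValueError("window must contain at least one sample")
    return {
        "count": len(samples_kw),
        "min_kw": min(samples_kw),
        "max_kw": max(samples_kw),
        "mean_kw": mean(samples_kw),
    }


# Four raw readings collapse into one record to forward to the cloud.
window = [10.0, 12.0, 8.0, 10.0]
summary = summarize_window(window)
```

In practice the window might hold thousands of high-rate samples, so forwarding only the summary cuts upstream traffic sharply while the raw data stays available locally.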

SCE’s Paylan sees potential for this kind of distributed, yet centrally manageable, architecture to help utilities move past merely mitigating the impacts of distributed energy resources (DERs) and start turning them into beneficial actors. “What we’re trying to do is find out how to embrace DERs,” he said. “That’s probably a bit of a shift,” but it’s one that utilities in California, Hawaii, New York and other states are being asked to make as regulators start to require them to integrate the potential grid-supporting value of customer-owned and operated DER into their grid operations and investment plans.

Take the example of smart inverters, a term that could simply mean solar PV inverters that are preset to avoid grid-disruptive behavior, or which could expand to include functions that can proactively take steps to support the grid in ways that utility-owned assets do today. “You’re not just setting set-points so that you don’t have to worry about it,” he said. “Really, this is saying, it’s got a lot of capabilities -- let’s see how we can do more than that -- not just to behave nicely on the circuit, but to play a role in the optimization.”

This puts pressure on any distributed device control system to also incorporate distributed data analysis in support of these tasks, he noted. “Having any device be just another resource with data, so that the entire platform can make use of this new resource, is definitely attractive,” he said. “Is it doable? There are challenges on both sides, on extending the existing data lines,” between device networks and utility distribution management systems and distributed energy resource management systems, “and creating new models so that new devices can be deployed.”
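The appeal of a shared data model can be sketched in a few lines: if every device reports in the same shape, platform code can treat a new device as "just another resource with data" without device-specific handling. The `Reading` record and its fields below are hypothetical and far simpler than any real utility data model, including whatever the OpenFMB working group defines.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Reading:
    """Hypothetical common record that every device type reports."""

    device_id: str
    device_kind: str      # e.g. "pv_inverter", "battery", "ev_charger"
    real_power_kw: float  # positive = injecting into the grid, negative = drawing


def net_power_by_kind(readings: Iterable[Reading]) -> Dict[str, float]:
    """Sum real power per device kind without any device-specific code."""
    totals: Dict[str, float] = {}
    for r in readings:
        totals[r.device_kind] = totals.get(r.device_kind, 0.0) + r.real_power_kw
    return totals


# Made-up readings from two inverters and one EV charger.
readings = [
    Reading("inv-1", "pv_inverter", 4.0),
    Reading("inv-2", "pv_inverter", 3.5),
    Reading("ev-1", "ev_charger", -7.0),
]
totals = net_power_by_kind(readings)
```

Adding a new kind of device here requires no change to `net_power_by_kind`, which is the point of agreeing on the model first.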

Open standards also open up this field to companies that may have good ideas and good technology, but lack the capital and expertise to do one-off integrations with custom-built microgrids, Murphy noted.

“You could have a small company that says, ‘We have a really innovative way to do this particular kind of analytics for a microgrid concept, and we just need to be able to run this thing on Linux. We know what the data model is, so we can just offer this.’ And they can go to a couple dozen system integrators, who may respond, ‘That’s really cool -- we want some of that.’ That’s what this can enable.”

Smith used an analogy from the telecommunications industry to explain how this could unlock the grid edge. “I think of this as the beginning of the mobile telecom revolution,” he said. “Everyone started out building their own proprietary things, and eventually the carriers said, ‘This isn’t going to work.’ They started working toward interoperability and lab verification,” and the results have been operating systems like iOS and Android, and a universe of app developers putting them to use in ways the industry couldn’t have imagined.

“We are at that beginning stage,” he said. “We’re just getting the momentum and the technical wherewithal to do it. But I fully [expect] that as we start to see success -- and we’re seeing that already -- the industry will start to move along these same lines.”