Back in July, Southern California Edison estimated it would need to invest up to $2.5 billion over the next half-decade to incorporate rooftop solar, behind-the-meter batteries, plug-in electric vehicles, and other distributed energy resources into its distribution grids. Of that, $245 million to $575 million was earmarked for “technology platforms and applications” -- in other words, cloud computing, software and apps.

But SCE is not going to be spending money on any of the traditional building-block software platforms commonly used in the utility industry.

Instead, it wants a grid management system, or GMS -- a “system of systems” that can do things at the edge of its distribution network that previous generations of technology, with their siloed data sets, proprietary protocols and central communications-and-control schemes, just can’t handle.

“What this whole presentation is about is trying to help people understand what it’s going to take to move our more centralized control architecture to a more distributed one,” Jeff Gooding, SCE’s principal manager of advanced technology, told a jam-packed conference room Monday at the DistribuTech show in Orlando. “We shouldn't underestimate how difficult that will be.”

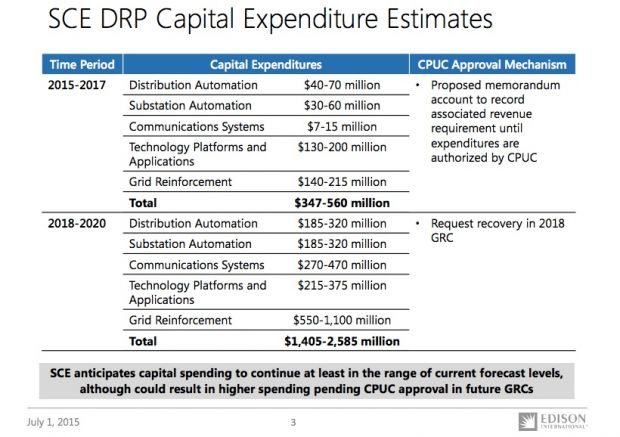

It won’t be cheap, either. As part of its July distribution resources plan (DRP) filing with the California Public Utilities Commission, SCE laid out its capital expenditure estimates on what it will cost over the next five years to prepare its grid for the onslaught of distributed energy resources -- between $1.4 billion and $2.5 billion.

About half of that funding -- which is still just a chart in a filing, rather than a full proposal, to be clear -- is aimed at replacing older and smaller substations with more robust modern versions. Substation and distribution automation make up about a quarter more of the projected costs.

But $245 million to $575 million is slated for “technology platforms and applications” -- about $130 million to $200 million for 2015 to 2017 under a memorandum account, and the rest in its next general rate-case proceeding for years 2018 to 2020. In a post-panel interview, Gooding identified this line item as the likely funding source for SCE’s grid management system work.

In Monday’s presentation, Gooding noted that SCE’s plan, while expensive, should be much cheaper than handling these new, disruptive grid forces the old-fashioned way. “If we just physically hardened our grid and reconductored everything, that would cost billions of dollars,” he said. “We think we can drive the same system we have now” without that cost.

But to keep up with this rate of change, SCE will be demanding a new level of standardization amongst the hardware, communications and software products and services that will be going into the GMS, he said. “There are certain requirements the vendor community really needs to pay attention to, to allow them to participate in this larger system of systems,” he said.

That will likely include distributed intelligence standards-in-development, such as the Open Field Message Bus (OpenFMB) protocol that’s been spearheaded by Duke Energy, and is now being worked on by the Smart Grid Interoperability Panel, he said.

But it will also involve some heavy software lifting from companies like General Electric and Alstom, Siemens and ABB that provide advanced distribution management systems (ADMS), Gooding noted. “We’re not saying the ADMS goes away completely -- elements of it still exist,” he said. But “current ADMS products need to evolve to accommodate that logic all the way out on the edge.”

Breaking down the grid management system

SCE is arguably one of the country’s most experienced utilities when it comes to bringing novel forms of distributed energy into its grid. In late 2014, it awarded more than 200 megawatts' worth of contracts for behind-the-meter batteries, thermal energy storage, energy efficiency and demand response as part of a groundbreaking local capacity resource (LCR) procurement.

But SCE and its fellow investor-owned utilities in California also face the task of incorporating distributed, customer-owned energy resources into their multibillion-dollar distribution grid plans, under the CPUC’s DRP and Integrated Distributed Energy Resources (IDER) proceedings. They’re also facing a state mandate to deploy energy storage at the hundreds-of-megawatts scale, big rollouts of electric-vehicle charging infrastructure, and new regulations for how smart solar inverters can be integrated into utility grid operations, to name a few of the grid-edge regulatory initiatives underway in the state.

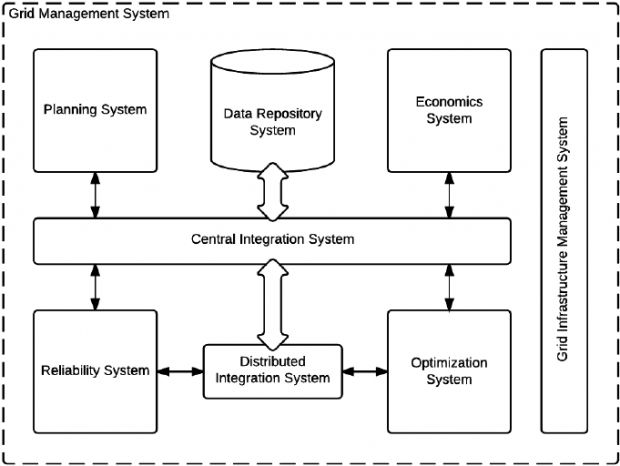

Today’s utility software systems -- including outage management systems (OMS), distribution management systems (DMS), energy management systems (EMS), and geographic information systems (GIS) -- “have served us well, and they’re interacting with different parts of the grid,” John Bubb, SCE’s principal advisor on IT architecture and system design, said in Monday’s presentation. But none do everything the utility thinks it will need to do in a DER-rich future.

The GMS will augment, or in some cases replace, these traditional software tools with modern IT, he said. “We need well-defined interfaces to promote interoperability across the system,” he said. “We also need a ‘single source of truth’ that is much more dynamically updated -- a much more real-time kind of thing.”

That modern IT includes service-based architectures, an integrated set of operational functions, and, critically, support for distributed intelligence at the edge of the network, he said. “At the very edge of the network, it needs to operate autonomously, based on local conditions,” he said. Simply put, many of the grid-DER interactions that will be going on in this future grid will be happening too fast for central control schemes to react in time.

Out of this mix of grid-edge intelligence, Bubb said, “We think there are pieces the utility owns,” like the core communications networks and critical distribution grid assets. But for the millions of rooftop solar systems, backup batteries and smart thermostats coming into the mix, “We absolutely expect to be using...third-party networks, public networks, and the internet, because our solution cannot be this gold-plated, perfect solution. We’ve got to have solutions that fit the right economics for the smaller DGs.”

SCE released supporting system architecture (PDF) and concept of operations (PDF) documents on Monday, along with plenty of technical diagrams and a list of 132 separate business requirements, such as “ability to accommodate human-operator action and manual intervention as necessary” or “provide a means for correlation between reliability and market use.”

Looking past all the dry technical details, however, the fundamental goal of the GMS is “a design philosophy that supports the active operation of the distribution grid to support high levels of distributed generation (e.g., more than 50 percent of total energy consumed), large amounts of customer-owned storage, and high levels of demand response.”

A roadmap to distributed, yet integrated, grid intelligence

SCE is already working on some of these challenges, Gooding noted. For example, SCE will be testing OpenFMB-enabled equipment and software platforms in its smart grid lab. That’s part of a broader project led by the Industrial Internet Consortium, a group of more than 150 companies working on a common path toward ubiquitous, standards-based machine-to-machine communication.

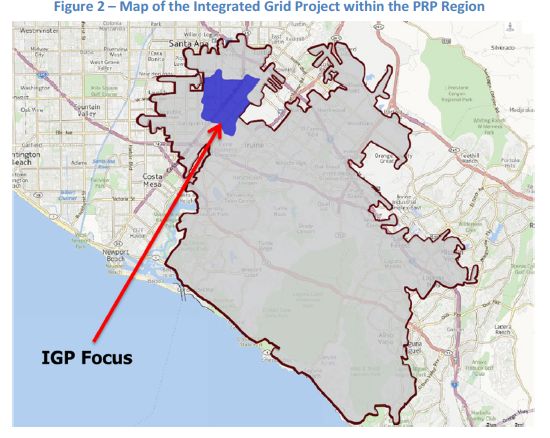

It’s also prepping its first big real-world test of distributed energy and intelligence in its Integrated Resources Pilot (IRP) project in grid-stressed Orange County. “Within this area, we’ve targeted a specific substation that has high levels of penetration of distributed energy resources, and we’re deploying a lot of those control applications there,” said Brenden Russell, SCE’s senior manager of power systems controls.

“We see substations evolving into places on the grid that can support data-center-like capabilities, where we can deploy distributed control systems to manage parts of the grid,” he said, adding, “Once we IP-connect all these devices, it opens up a ton of problems we need to manage around security.”

Of course, “centralized control of the grid is not going to go away,” he said. “What’s going to change is that we’re going to have a lot more devices than what we have today. We have to push out macro-controls to groups of devices, or individually. […] The central algorithms are still relevant. But it’s more a question of scalability.”

To test the technology behind these concepts, “We’re currently running a proof of concept where we can start deploying applications out to edge devices, and get devices to talk to each other without sending back to the central control system,” he said. “It really takes [human oversight] out of the loop.”
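For readers who want a concrete picture of devices talking to each other without a round trip to a central system, here is a minimal sketch of the publish/subscribe pattern that field message buses generally rely on. It is purely illustrative: the `FieldBus` and `Battery` classes, the `feeder/voltage` topic and the 1.05 per-unit threshold are all hypothetical names and values chosen for this example, and none of it represents the OpenFMB specification or SCE's proof of concept.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class FieldBus:
    """Toy in-process publish/subscribe bus (hypothetical, not the OpenFMB API)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        # Register a device callback for a topic.
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        # Deliver the message straight to every subscribed device.
        # No central controller sits between publisher and subscriber.
        for handler in self._subscribers[topic]:
            handler(message)


class Battery:
    """Edge device that reacts to a neighbor's readings using only local logic."""

    def __init__(self, bus: FieldBus, high_voltage_pu: float = 1.05) -> None:
        self.high_voltage_pu = high_voltage_pu  # assumed threshold, in per-unit
        self.mode = "idle"
        bus.subscribe("feeder/voltage", self.on_voltage)

    def on_voltage(self, message: dict) -> None:
        # Charge when local voltage runs high (e.g., surplus solar); otherwise idle.
        if message["voltage_pu"] > self.high_voltage_pu:
            self.mode = "charging"
        else:
            self.mode = "idle"


bus = FieldBus()
battery = Battery(bus)

# A hypothetical line sensor publishes a reading; the battery decides locally.
bus.publish("feeder/voltage", {"voltage_pu": 1.07})
print(battery.mode)
```

In a real deployment the bus would run over a network with authentication and standardized message schemas, which is where the interoperability and security concerns raised by SCE's engineers come in.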

But pulling these disparate R&D and pilot activities into an overarching software framework will take some time, Gooding said. The next few years will involve new monitoring equipment, data analysis, and testing of more advanced, distributed-intelligence-capable technologies on the grid, he said. For a full rollout, “From the time we say ‘go,’ we see five to six years before we see a lot of these capabilities.”

That’s fast for a utility adding a whole new layer of grid operations technology and information technology. But it’s just keeping pace with the changing mix of energy technology showing up at the homes, businesses and facilities of its customers, Caroline Choi, SCE’s vice president of energy and environmental policy, noted in Monday’s meeting.

“The future is going to be more and more distributed, and our customers are forging that path with the choices they’re making, whether with solar rooftops, or electric cars, or batteries,” she said.

Bubb added that SCE wants to share its GMS experience with other utilities. “Many of these capabilities are going to be brand-new to utilities. We’re looking for other like-minded utilities that feel they’re facing these challenges in about the same timeframe we are -- we think we can build some positive momentum.”