Are demand-response providers shortchanging California utilities and customers by claiming payments for services they can’t deliver, leaving the state subject to future grid instability?

Or are the state’s market rules and regulations shortchanging demand-response companies and customers, undermining the most cost-effective tool for future grid reliability?

This dispute between state energy regulators and the demand-response industry has become a flashpoint as California works to prevent a repeat, this coming summer, of the grid emergencies and rolling blackouts of August 2020.

And it’s not clear that the data available can definitively prove which point of view is the correct one — unless, perhaps, more sophisticated methods can provide an answer.

That’s because gauging the performance of demand response inherently involves measuring a negative value — how much electricity homes, businesses, factories, water pumping stations and other loads don’t consume, compared to what they could have been expected to consume, over a given period of time.

To fairly compensate the value of these “negawatts,” while also preventing participants from being paid for load reductions that don’t actually occur, regulators have devised a bewildering array of load impact protocols, baselining methodologies and other approaches designed to encourage good-faith activity and prevent market-gaming.

They’ve also created economic structures, known as capacity payments or, in the parlance of California’s regulatory regime, resource adequacy (RA) contracts, that prepay companies to invest in the technology and operational infrastructure that will allow them and their customers to deliver those negawatts at a future date. Then, when those negawatts are actually needed, additional market rules come into play, with the goal of rewarding those that perform and penalizing those that don’t.

Back in 2015, the California Public Utilities Commission launched a major revamp of its demand-response regime, designed to shift it from utility-run programs to multiparty market participation based on these principles. The goal was to invigorate a sector that provided peak demand reductions equal to less than 5 percent of system peak, and to capture private-sector investment in low-cost, carbon-free grid-balancing resources.

But since then, total demand response capacity in California has actually shrunk from about 2,000 megawatts to about 1,600 megawatts, representing 3.5 percent of total RA obligations. Utilities have seen programs shrink, and about $58 million in demand-response budgets has gone unspent over the past two years.

At the same time, the new “economic” demand response model has not picked up the slack. The state’s centerpiece market-enabling program, the Demand Response Auction Mechanism (DRAM), had its budget cut from $27 million to $14 million in 2019, slashing about 150 megawatts of demand response capacity from the grid, according to DR providers.

All the while, the CPUC and state grid operator CAISO have shown increasing skepticism of demand response’s ability to reliably serve a role in balancing the state’s increasingly renewable-powered grid — a skepticism that has sharpened even more in regulators’ latest report on the August blackouts.

The negative view of demand response during the August blackouts

The final root-cause analysis report from the CPUC, CAISO and the California Energy Commission provides detailed data on the performance of more than 1,600 megawatts of resources over August 14 and 15. That includes about 1,100 MW of reliability demand response resources, which are triggered when CAISO market prices approach CAISO’s bidding cap of $1,000 per megawatt-hour. It also includes another 358 MW of utility-managed and 243 MW of non-utility-managed proxy demand response (PDR), which bids into markets at prices set by utility programs or chosen by aggregators.

According to the report, this collection of market-dispatched demand response failed to provide the level of load drop that its RA values indicated it could have made available. Metered load drop diverged significantly from market awards, ranging from 81 percent of awards for the reliability demand response resources to as low as 41 percent for the non-utility PDR resources.

The recommendations that emerged did not evince a charitable view of the reason for this divergence.

First, while “a part of this divergence in the real-time markets is due to some demand response resources not being capable of responding to real-time conditions, most of this divergence may be due to bidding practices of PDR providers that reduce the likelihood of the associated demand response resources being selected in the day-ahead market, even on days with extremely high day-ahead demand forecasts,” the report states.

While the report doesn’t specify what this means, a 2019 report from the CPUC Energy Division staff notes that there is “emerging evidence that a growing amount of third-party proxy-demand response resources are similarly bidding at levels that ensure the resource is not dispatched in the market.” In other words, regulators are questioning whether market participants are using very high bids in an attempt to avoid having to prove that the capacity they’ve been awarded RA contracts for can in fact be delivered.

The next recommendation from the final root-cause analysis report takes this line of inquiry even further, noting that the “observed divergence between awarded [megawatts] and delivered [megawatts] (load drop) requires further study and remedy. The divergence is particularly large for [non-utility] PDR and suggests that a significant portion of [non-utility] demand response providers may not be accurately estimating available capacity.”

This statement could be interpreted as questioning whether DR aggregators are making errors in estimating their available capacity. It could also be seen as suggesting that they are knowingly seeking out RA contracts for load drop they cannot deliver.

"Arcane policies and outdated rules" under the microscope

This intimation of inaccurate or improper management has met with growing frustration from DR providers in California. That’s because these groups have been arguing for years that the root of California’s problems with the resource lies not in how they’re being managed but in how they’re being measured.

The DR Coalition, a group including Google (Nest), Leap, NRG Energy, OhmConnect, Oracle (Opower), Tesla and Voltus, stated in its December CPUC filing that “a number of arcane policies and outdated rules adopted by the commission and implemented by the [investor-owned utilities] have led to lackluster participation in the state’s DR and [energy efficiency] programs over the past few years.”

These problems were compounded during the August heat wave, when their customers participated “with great enthusiasm and responsiveness despite being curtailed for up to five consecutive days, for up to five hours per event, which exceeded the minimum requirement of three consecutive dispatches of four hours for RA resources. For many DR customers operating businesses or with children at home, this was a significant sacrifice.”

“Unfortunately, most of these customers were not fully compensated for their performance,” the group states in the filing — a result, they said, of “rules governing the measurement of performance and their applicability to their monthly capacity payment.”

The key problem lies in how the CPUC and CAISO apply baselines, estimates of how much power customers would normally be expected to use, to measure how much load those customers drop during events. The rules for setting and interpreting performance under these baselines are laid out in Load Impact Protocols, created by the CPUC in 2008, which “look at historic performance and temperature sensitivity to forecast load drop over the availability assessment hours.”

The most prevalent method is known as a “10 in 10” baseline, which averages a customer’s load over the previous 10 non-event days to set the level against which load drops on event days are calculated. But even with additional “day-of adjustments” built in to correct for the fact that grid emergencies are almost always called on days much hotter than the ones before them, this baselining methodology can end up indicating that customers didn’t drop load at all.
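To make those mechanics concrete, here is a minimal Python sketch of a 10-in-10 baseline with a multiplicative day-of adjustment. It is an illustration under stated assumptions, not the CPUC’s or CAISO’s settlement code: the function names, the three-hour pre-event adjustment window, the 20 percent cap and all load values are hypothetical, and real programs differ in how they select eligible days and cap adjustments. It shows how a customer who genuinely curtails on a very hot day can still register a negative “load drop” when the capped adjustment fails to lift the baseline far enough.

```python
from statistics import mean

def ten_in_ten_baseline(prior_days):
    """Hour-by-hour average of the 10 most recent non-event days (24 hourly kWh values each)."""
    if len(prior_days) != 10:
        raise ValueError("expected exactly 10 prior non-event days")
    return [mean(day[h] for day in prior_days) for h in range(24)]

def day_of_adjustment(baseline, event_day, adjustment_hours, cap=0.20):
    """Scale the baseline by actual/baseline load in pre-event hours, clipped to 1 +/- cap.

    The 20 percent cap and the choice of adjustment hours are illustrative assumptions.
    """
    actual = sum(event_day[h] for h in adjustment_hours)
    expected = sum(baseline[h] for h in adjustment_hours)
    ratio = min(max(actual / expected, 1 - cap), 1 + cap)
    return [b * ratio for b in baseline]

def measured_load_drop(adjusted_baseline, event_day, event_hours):
    """Credited load drop per event hour: adjusted baseline minus metered load (kWh)."""
    return {h: adjusted_baseline[h] - event_day[h] for h in event_hours}

# Hypothetical customer: ten mild prior days at a flat 2.0 kWh per hour.
prior_days = [[2.0] * 24 for _ in range(10)]

# Hypothetical hot event day: usage climbs to 3.0 kWh in pre-event hours 13-15.
# Without the event the customer would have used about 3.5 kWh per hour from 16:00 to 20:00,
# but it curtails to 2.8 kWh, a real reduction of 0.7 kWh per hour.
event_day = [2.0] * 24
for h in (13, 14, 15):
    event_day[h] = 3.0
for h in range(16, 20):
    event_day[h] = 2.8

baseline = ten_in_ten_baseline(prior_days)
adjusted = day_of_adjustment(baseline, event_day, adjustment_hours=(13, 14, 15))
drops = measured_load_drop(adjusted, event_day, event_hours=range(16, 20))
print({h: round(v, 2) for h, v in drops.items()})
# {16: -0.4, 17: -0.4, 18: -0.4, 19: -0.4}
```

In this hypothetical, the pre-event ratio is 1.5 but the cap limits it to 1.2, so the baseline rises only to 2.4 kWh. The customer’s real 0.7 kWh per-hour cut is therefore recorded as a 0.4 kWh per-hour increase.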

That’s what happened to OhmConnect customers during the August heat wave, CEO Cisco DeVries argued in a Twitter thread this month. “We paid @ohmconnect customers to successfully reduce energy use by 200 megawatt hours! Great news! Except CA’s rules meant we were penalized.”

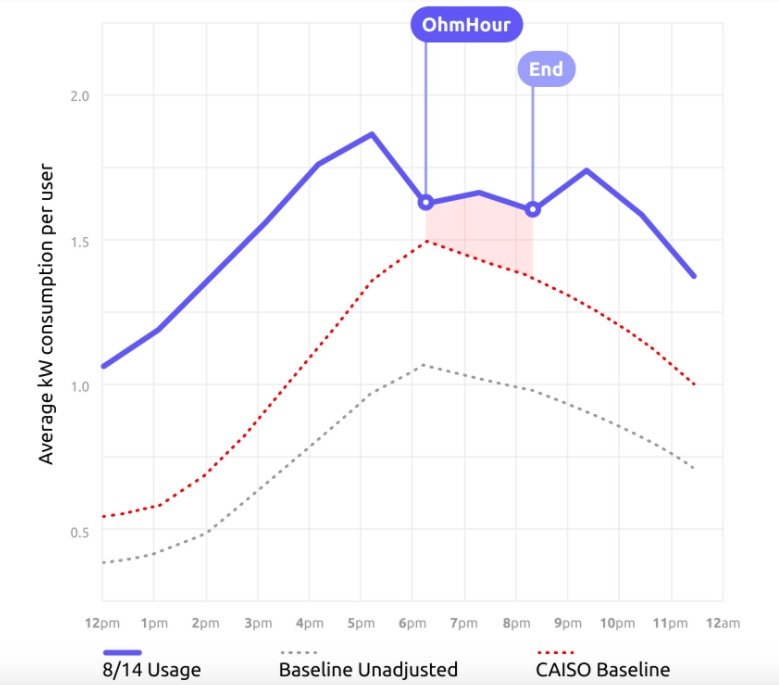

The data laid out in a recent OhmConnect blog post highlights the gap between its customers and the baselines set for them.

Not only did the company receive no credit for the load reductions it did achieve, “we actually had to buy power on the spot market,” DeVries wrote. “This is just one of the many ways [California's] energy market fails to support, encourage, or compensate demand response."

This state of affairs has harmed demand response in the past, and it could also undermine its potential to aid in future grid emergencies, the DR Coalition states in its December filing. At present, the CPUC’s efforts to create emergency measures to prepare for summer 2021 haven’t included expanding the DRAM program or creating new pathways for demand response to participate, according to the document.

Nor has the commission addressed the disconnect between baselining methods and actual performance during heat waves, the companies contend. This “will significantly dampen enthusiasm for participating” in 2021 and beyond, they warn, “unless measures are taken to ensure that they will be appropriately compensated should a similar heat event occur again.”

Demand response: An imperfect but vital resource

It’s difficult to see how the distrust between California regulators and the demand-response industry can be resolved, given the reliance on methods that attempt to measure load drop on the same playing field as power plants or batteries.

“I think that our biggest obstacle, to be honest, is having the perfect be the enemy of the good,” Jennifer Chamberlin, executive director of market development for demand response provider CPower, said in a December webinar on demand response. “There is not a perfect resource out there.”

“We don’t want to compensate customers for doing something they didn’t do,” she said. But as the disputes over performance during the August heat wave point out, “we’re very ingrained from a policy perspective to making sure everything is perfect.”

David Meyers, CEO of Polaris Energy Services, the biggest agricultural pumping demand-response provider in the state, agreed that part of the problem lies in attempting to measure the inherently unpredictable value of customer load reduction as if it can be precisely matched to power plants, batteries or other dispatchable and metered generation.

As an industry, “we told the utility world [that] we can do with demand exactly what you do with generators,” he said. “This whole thing of bidding into the market — the proxy demand response and reliability demand response — it’s all tied to this resource adequacy construct and to the baselines. And it’s very difficult. Nobody I know is playing games with this; we’re all trying to do it right. But you’re making your best estimates.”

Meyers pointed out that the voluntary conservation efforts that helped prevent further grid emergencies after the August rolling blackouts, efforts lauded by state regulators, might well have been judged differently had they been subjected to the same rules that demand-response providers face.

The 22 megawatts of load reduction from U.S. Navy ships in the days following Aug. 14 and 15, for example, could “never be in a DR program because you don’t know in advance when the ships will be there,” he said. “If they were, their nominations would be severely derated because of the difficulty in forecasting their load.”

And if the ships hadn’t been in harbor and using power from shore-based grid connections for several days before they were called on to reduce their load, baselining methods may well have calculated that they had actually added to grid stress, rather than reduced it, he pointed out.

But without some agreement on how to measure demand response in a way that both holds providers to account and compensates good-faith participation, California may be missing out on what reams of research show will be an integral part of the resource mix the state needs to manage its increasingly renewable-powered grid.

A long-running research project by Lawrence Berkeley National Laboratory finds that shifting electrical loads to match solar power’s ups and downs could replace gigawatts of batteries that would otherwise be required to meet the evening peak, particularly as California shifts transportation and heating from fossil fuels to electricity.

But as LBNL’s most recent report notes, this will require a whole new set of “codes and standards that encourage flexible load control at the device level,” as well as “an improved policy analysis paradigm that integrates the co-benefits of energy efficiency, DR, and other distributed energy resources.”

“As we get into a more and more carbon-free grid with more and more intermittent renewables on it, a viable and cost-effective way to help manage that is that loads are going to have to become more capable of following supply," CPower's Chamberlin said in a January interview. To get there, she said, “We’re going to have to find new ways to measure this, and we’re going to have to find ways that are acceptable to folks as being solid and reliable.”

A new way to measure disappearing load?

That’s the goal of Matt Golden, CEO of Recurve Energy. His company was a lead party in the stakeholder process that developed CalTrack, an open-source method that provides a transparent, reproducible and verifiable reckoning of normalized metered energy consumption. CalTrack is being used for performance-based energy efficiency programs in California, Oregon and New York.

These methods, combined with the open-source OpenEEmeter, a reference implementation of CalTrack methods that is now part of Linux Foundation Energy (LF Energy), put real-world data to work to provide revenue-grade measurements of the effects of energy efficiency, demand response and demand-side flexibility.

This method of calculating a “counterfactual,” the energy reduction that can be credited to an intervention when measured against an estimate of what would have been used had the intervention never taken place, can also be used in the realm of demand response, Golden said.

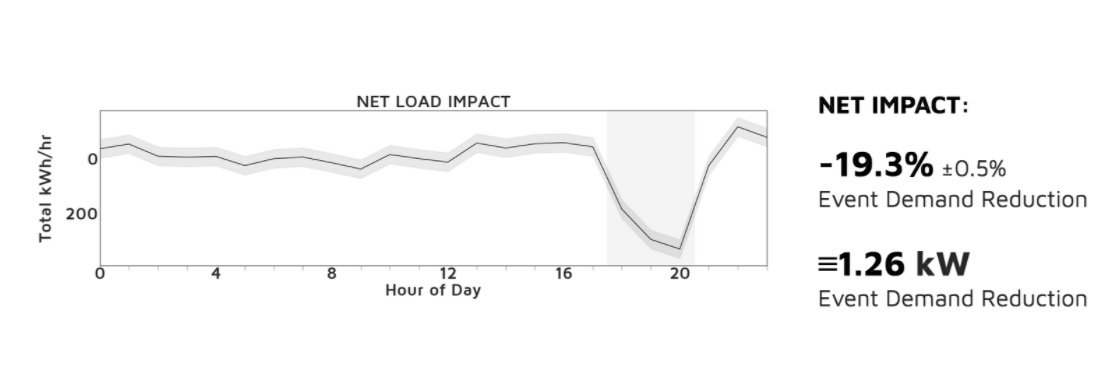

“We can differentiate between long-term avoided-cost values and resource adequacy, and [using] the same tool,” he said. In fact, Recurve applied this method to show that OhmConnect customers’ actions during the Aug. 14 and 15 heat wave did lead to a significant reduction in energy use compared to what would otherwise have happened, despite the CPUC’s and CAISO’s baselining process indicating otherwise.

To achieve that, Recurve used OpenEEmeter to analyze both OhmConnect’s customers and a comparable reference group of similar customers, normalizing their consumption against hourly weather and occupancy patterns. That creates a forecasted baseline for how much energy each building would be expected to consume during a given period, based not on guesswork but on a verifiable, step-by-step mathematical process.
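The idea of a weather-normalized counterfactual can be illustrated with a heavily simplified Python sketch. It assumes a single linear relationship between hourly usage and cooling degrees above a 65°F balance point, fits it by ordinary least squares on pre-event hours, and uses it to predict what the building would have consumed during event hours. CalTrack’s actual hourly method is considerably more elaborate and also models occupancy patterns; the function names, balance point and data here are hypothetical.

```python
from statistics import mean

def cooling_degrees(temp_f, balance_point=65.0):
    """Degrees above an assumed 65°F balance point; zero on cooler hours."""
    return max(temp_f - balance_point, 0.0)

def fit_weather_model(temps_f, usage_kwh, balance_point=65.0):
    """Ordinary least squares fit of usage = intercept + slope * cooling degrees."""
    x = [cooling_degrees(t, balance_point) for t in temps_f]
    x_bar, y_bar = mean(x), mean(usage_kwh)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, usage_kwh))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

def predict(model, temps_f, balance_point=65.0):
    """Counterfactual hourly usage the model expects at the given temperatures."""
    intercept, slope = model
    return [intercept + slope * cooling_degrees(t, balance_point) for t in temps_f]

# Hypothetical pre-event hours for one home (not real OhmConnect data):
# roughly 1.0 kWh of base load plus 0.1 kWh per cooling degree.
train_temps = [60, 70, 80, 90, 75, 85]
train_usage = [1.0, 1.5, 2.5, 3.5, 2.0, 3.0]
model = fit_weather_model(train_temps, train_usage)

event_temps = [105, 108]    # hotter than any hour in the training data
event_actual = [4.0, 4.1]   # metered kWh during the event
expected = predict(model, event_temps)
savings = [e - a for e, a in zip(expected, event_actual)]
print([round(s, 2) for s in savings])  # [1.0, 1.2]
```

Because the event-day temperatures lie outside the training range, this prediction relies on extrapolation, which is exactly where a single building’s own history is least reliable. That limitation is what a comparison group is meant to address.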

“Then we use GridMeter,” a tool Recurve has developed as part of a DOE-funded project that creates a comparison group to measure against. “That allows us to get to the net impacts from the load that’s specifically isolated from OhmConnect’s intervention, with everything else taken out.”

The results, according to the analysis, are shown in the following chart.

This method avoids a key problem with baselining: the fact that how much energy a customer used in the past may not serve as a useful way to measure the effectiveness of what they’ve done during extraordinary events, like a record-setting heat wave.

“Instead of trying to use your own past data to predict an event that’s different than all your past data,” he said, “we have metrics about how everybody uses energy — the treated group and the general population — and we create a stratified sample, a pool of customers across all the metrics we have. [Then we] create a comparison group that’s 10,000 customers that are just like them” and adjust for every other difference between them.

That’s known as correcting for “exogenous effects,” he said: factors separate from the actions customers take in response to a demand response call. Those could include everything from changing use patterns due to COVID-19 stay-at-home restrictions to a CAISO Flex Alert issued the same day, which led many customers to reduce their consumption.
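The comparison-group logic Golden describes can be sketched generically as stratified matching followed by a difference-in-differences estimate. This minimal Python illustration is not GridMeter’s actual algorithm: the strata (climate zone plus a coarse usage bin), field names, sample sizes and data are all hypothetical. Subtracting the comparison group’s average reduction removes effects that hit everyone, such as a Flex Alert, leaving the reduction attributable to the program.

```python
import random
from collections import defaultdict
from statistics import mean

def stratum(customer):
    """Hypothetical strata: climate zone plus a coarse average-usage bin."""
    return (customer["climate_zone"], min(int(customer["avg_kwh"] // 10), 4))

def stratified_comparison_group(treated, pool, per_treated=2, seed=0):
    """Draw up to `per_treated` non-participants from each treated customer's stratum."""
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for customer in pool:
        by_stratum[stratum(customer)].append(customer)
    group = []
    for customer in treated:
        candidates = by_stratum[stratum(customer)]
        group.extend(rng.sample(candidates, min(per_treated, len(candidates))))
    return group

def mean_reduction(group):
    """Average of counterfactual minus metered usage across a group (kWh)."""
    return mean(c["counterfactual_kwh"] - c["event_kwh"] for c in group)

def net_reduction(treated, comparison):
    """Difference-in-differences: treated reduction minus the comparison group's reduction."""
    return mean_reduction(treated) - mean_reduction(comparison)

# Hypothetical participants, each cutting 1.0 kWh below its own counterfactual.
treated = [
    {"climate_zone": "CZ12", "avg_kwh": 25, "counterfactual_kwh": 5.0, "event_kwh": 4.0},
    {"climate_zone": "CZ13", "avg_kwh": 35, "counterfactual_kwh": 6.0, "event_kwh": 5.0},
]

# Hypothetical non-participants who all trimmed 0.3 kWh, e.g. in response to a Flex Alert.
pool = [
    {"id": i, "climate_zone": zone, "avg_kwh": kwh, "counterfactual_kwh": 5.0, "event_kwh": 4.7}
    for i, (zone, kwh) in enumerate(
        (z, k) for z in ("CZ12", "CZ13") for k in (15, 25, 35) for _ in range(3)
    )
]

comparison = stratified_comparison_group(treated, pool)
print(round(net_reduction(treated, comparison), 2))  # 0.7
```

Here every non-participant trimmed 0.3 kWh, standing in for an exogenous effect, so only 0.7 kWh of the participants’ 1.0 kWh average reduction is credited to the program.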

And because every step in the process can be rerun and proven according to the CalTrack methodology, “it’s revenue-grade, which means it’s auditable, and it’s settlement quality — it’s the net of load shape absent exogenous factors. It’s just what OhmConnect did.”

This method does require massive computing power to process data from millions of smart meters, as well as application of recently developed “Energy Differential Privacy” methods to protect that customer data, he noted. “It was only about two years ago we were able to do that for the first time.”

But having that data analysis capability in hand opens up these methods for use in the real world. “Everything we’re doing is in the load impact protocols,” which allow comparison groups as a method for proving compliance. “It’s all accepted. They could analyze this and say, 'If it does make sense, we’ll allow it.'”

The next step could be piloting this approach as part of the emergency demand response program proposed by the CPUC in its November emergency order, Golden said. To be clear, this is just one of many proposals for reforming the state’s demand response regime.

Those include relatively moderate modifications to existing processes, such as increasing the weather adjustments to account for extraordinarily hot days or allowing participants to receive credit for load drops during weekends or outside defined hourly blocks. They also include proposals to increase budgets for existing programs such as DRAM and utility smart thermostat rebates.

Beyond quick fixes, California is undertaking a broad-ranging review of its resource adequacy construct to adapt to a grid that will need demand-side resources to more closely match the ups and downs of intermittent renewable energy. That will require both highly responsive loads that can follow market dispatches (energy storage is a big category for further development) and approaches that use real-time pricing to achieve the load shifting the state hopes to enlist to balance its grid. We’ll be getting into more detail on these next-step concepts in future GTM Squared coverage.