First we had distributed computing. Then we had distributed power generation.

AMD now wants to cross-breed these concepts in the hope of producing a fossil-free, distributed data center.

The company -- in conjunction with Clarkson University, Hewlett-Packard and the New York State Energy Research and Development Authority (NYSERDA) -- will conduct a series of experiments to see whether data centers can operate wholly or nearly exclusively on solar, wind and other renewable sources of energy.

Instead of building large, monolithic data centers covering tens of thousands of square feet, AMD will disperse modular computing pods filled with servers, networking boxes and storage systems across a geographic region. These pods will be powered by renewable energy plants right next door.

If the wind is strong near a particular pod, intensive computing jobs can be shifted to it. When clouds pass over one pod, its applications can be rolled to another location. To ensure greater power availability, many of the pods will be planted next to wind and solar assets.

“The idea is to shift the compute load from location to location based on the availability of [power] capacity,” said Steve Kester, director of government relations and regulatory affairs at AMD.
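As a rough illustration of what shifting load by power availability might look like, here is a minimal Python sketch. It is not AMD's scheduler: the pod names, the kilowatt figures and the greedy largest-job-first rule are all assumptions made up for the example.

```python
def place_jobs(pod_power_kw, jobs):
    """Greedy placement sketch (hypothetical, not AMD's method).

    pod_power_kw: dict of pod name -> renewable power currently available (kW).
    jobs: list of (job name, power demand in kW) tuples.
    Returns a dict of job name -> pod name, or None if no pod can host the job.
    """
    remaining = dict(pod_power_kw)  # copy so the caller's data is untouched
    placement = {}
    # Place the most power-hungry jobs first.
    for job_name, demand_kw in sorted(jobs, key=lambda j: j[1], reverse=True):
        # Pick the pod with the most spare renewable power right now.
        best_pod = max(remaining, key=remaining.get)
        if remaining[best_pod] < demand_kw:
            placement[job_name] = None  # no pod has enough power; job waits
            continue
        placement[job_name] = best_pod
        remaining[best_pod] -= demand_kw
    return placement


# Made-up example: one pod sits in strong wind, the other is under cloud cover.
pods = {"windy_ridge": 100.0, "cloudy_valley": 30.0}
jobs = [("render", 60.0), ("index", 35.0), ("backup", 50.0)]
placement = place_jobs(pods, jobs)
```

In this made-up case the two jobs that fit land on the windy pod and the third waits for capacity. A real system would also have to weigh the cost of moving data and the latency users see.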

Besides curbing energy use, this approach will cut infrastructure expenses. It costs only about $15,000 to lay a mile of fiber optic cable to connect one distributed data pod to another and only around $500 to activate a mile of dormant or “dark” fiber, he said.

A mile of transmission line runs about $750,000 to $1 million, not including the hours of public hearings involved.
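To put those per-mile figures side by side, the short Python sketch below works out how many miles of fiber one mile of transmission line would buy. The dollar amounts are the ones quoted above; the constant and function names are invented for the example.

```python
# Per-mile costs in dollars, as quoted in the article.
NEW_FIBER = 15_000
DARK_FIBER = 500
TRANSMISSION_LOW = 750_000
TRANSMISSION_HIGH = 1_000_000


def cost_ratio_range(link_cost_per_mile):
    """Return (low, high): how many times more a mile of transmission line costs."""
    return (TRANSMISSION_LOW / link_cost_per_mile,
            TRANSMISSION_HIGH / link_cost_per_mile)


new_low, new_high = cost_ratio_range(NEW_FIBER)
dark_low, dark_high = cost_ratio_range(DARK_FIBER)
print(f"New fiber:  transmission costs {new_low:.0f}x to {new_high:.0f}x more per mile")
print(f"Dark fiber: transmission costs {dark_low:.0f}x to {dark_high:.0f}x more per mile")
```

On these numbers, a mile of transmission line costs roughly 50 to 67 times as much as a mile of new fiber, and 1,500 to 2,000 times as much as lighting a mile of dark fiber.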

If it works -- and it’s still a big if -- chasing the weather like this could help put a dent in the ever-growing power diet of computers. Data centers consume around 2 percent of the electricity in the U.S., and in Northern California, the Potomac region and parts of New York, the figure is higher and climbing. Overall, that's not a lot of power, but for those who pay the bills it is becoming a headache. Power can account for around 30 percent of the operating expenses of web companies, second only to personnel.

Green IT has grown rapidly as a result. Some of the leading ideas for curbing power use have been weather mapping inside of data centers (Sentilla, SynapSense), application shifting (PowerAssure), improved AC controls (Vigilent), switching from AC to DC power (ABB, GE, Nextek), swapping disks for flash memory (Sandforce), better power conversion (Transphorm), and smaller, more energy-efficient servers and chips (SeaMicro, Calxeda).

Geography has been a favorite concept too, but mostly for curbing AC power consumption, not rolling applications to save power. Yahoo built a data center near Lake Erie so that it wouldn’t have to rely on mechanical air conditioning to cool its racks. In Norway and Finland, data centers are being located in mines and underground caves.

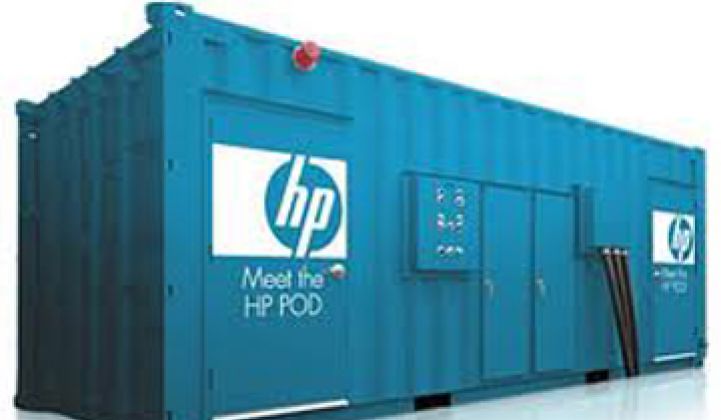

HP and Oracle/Sun have both promoted modular data centers as a way to curb energy and construction costs. An HP pod pretty much resembles a storage unit that fits on the back of a tractor-trailer. Both the size and mobility can ease cooling problems.

One of the biggest challenges will be latency. Can a data center manager shift an application from one pod to another and back without slowing down performance? Will users experience stalls and hiccups? PowerAssure rolls applications too, but it’s not chasing ever-changing weather patterns and power loads.

A factor that may ameliorate the latency problem is the nature of computing today. Data centers used to tackle complex problems like financial simulations. Now, most servers spend their days coughing up the same, static “About Us,” “Get our iPhone App,” or “You Certify That You Are 18 Years or Older” web pages over and over and over. Caching and other strategies potentially can mask transfer issues.

The planning and testing phase of the project will last 12 to 18 months. If all works well, AMD and its partners will move to small-scale deployments.