Computers are both a good-news and a bad-news story when it comes to sustainability and energy.

First, the good news. Computers are a boon to the environment. Rack-mounted servers consume about 2.5 percent of all of the electricity in the U.S., but consider the myriad of services -- email, Twitter, online shopping, banking -- you get from them. Lighting consumes 22 percent, but you could shut off the lights in your office now and probably continue to do your job.

Teleconferencing systems pay for themselves in a year in avoided airplane tickets.

Now, the bad news. Computers generate waste heat like saunas. Air conditioning can gobble up half or more of the power in data centers. Most of the time computers are in idle mode, but consume about 85 percent of the power that they would while active. Worse, many large web companies and banks say that their utilities have capped their power consumption: without more efficient equipment, their businesses can’t grow, or grow easily.
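To get a feel for how much that idle draw matters, here is a rough back-of-the-envelope sketch in Python. Only the 85 percent idle ratio comes from the figure above; the 400-watt peak draw and the 20 percent utilization in the example are illustrative assumptions, not measurements.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_kwh(peak_watts, utilization, idle_fraction=0.85):
    """Estimate yearly energy (kWh) for one server.

    peak_watts    -- power drawn while active (assumed value, not from the article)
    utilization   -- share of the year the server is actually busy, 0.0 to 1.0
    idle_fraction -- idle draw as a share of active draw (0.85 per the text)
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    active_hours = HOURS_PER_YEAR * utilization
    idle_hours = HOURS_PER_YEAR - active_hours
    watt_hours = peak_watts * active_hours + peak_watts * idle_fraction * idle_hours
    return watt_hours / 1000.0


def idle_share(utilization, idle_fraction=0.85):
    """Fraction of a server's total energy that is spent while idle."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    idle = idle_fraction * (1.0 - utilization)
    return idle / (utilization + idle)


if __name__ == "__main__":
    # Hypothetical server: 400 W when active, busy 20 percent of the time.
    print(round(annual_energy_kwh(400, 0.20), 1), "kWh per year")
    print(round(idle_share(0.20) * 100, 1), "percent of energy spent idle")
```

Under those assumptions, well over half of the server's annual energy goes to doing nothing, which is why idle power is such a popular target.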

Luckily, many startups and established companies are rushing to the fore, making this perhaps the biggest opportunity out there. Here are the main subsegments:

Architecture and Design. Take advantage of the elements. Local Host in Norway hopes to build data centers in abandoned mine shafts, while test and measurement expert BaseN built one in a seaside underground bomb shelter in Helsinki. Yahoo’s data center near Buffalo needs no mechanical cooling.

Power Loft Services, meanwhile, has come up with a two-story building design that separates cooling equipment from computers. Ideally, this makes cooling less site-specific than the above examples. A 225,000-square-foot data center in summery Virginia designed by Power Loft sports a power usage effectiveness (PUE) of 1.28.
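PUE is the ratio of the total power a facility draws to the power that actually reaches the computing equipment, so a perfect score is 1.0. The short Python sketch below shows what a given PUE implies about overhead; the function names are made up for illustration.

```python
def pue(total_facility_kw, it_equipment_kw):
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_fraction(pue_value):
    """Share of total facility power spent on cooling, power
    distribution and other non-IT loads for a given PUE."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 - 1.0 / pue_value


if __name__ == "__main__":
    for value in (2.0, 1.28):
        share = overhead_fraction(value) * 100
        print(f"PUE {value}: {share:.1f} percent of power is overhead")
```

A facility where cooling and other overhead eat half the power has a PUE of 2.0. At 1.28, overhead falls to roughly 22 percent of the total.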

Others like Oracle-Sun and PDI have devised modular data centers out of 40-foot truck containers. The containers help isolate hot air more effectively.

Cooling. While ambient air is a favorite, other techniques exist, too. Facebook, through its recent Open Compute Project, designed and now uses taller-than-normal servers to channel air better. The server design, and the design of its datacenter, cut power by 38 percent: both are available to you via an open source license. Many companies have also opted for slower fans with big blades to cut cooling costs.

IBM has continued to pursue liquid cooling of chips and servers. An experimental datacenter in Syracuse is the latest example. (The Syracuse one also takes advantage of waste heat.) While liquid cooling sounds interesting, it’s an idea that comes and goes: many supercomputers in the 1950s had it.

DC Power. In the usual situation, high voltage AC power from the grid might be converted to DC and back again and stepped down in voltage five times before it gets used inside a computer. Each conversion results in power losses.
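The losses compound because each stage only passes along a fraction of what it receives. A minimal Python sketch of that arithmetic follows; the 96 percent per-stage efficiency is a hypothetical round number chosen for illustration, not a figure from any vendor.

```python
import math


def chain_efficiency(stage_efficiencies):
    """End-to-end efficiency of a series of power conversion stages.

    Each entry is the fraction of input power a stage passes on
    (for example 0.96). Stages in series multiply together.
    """
    stages = list(stage_efficiencies)
    for eff in stages:
        if not 0.0 < eff <= 1.0:
            raise ValueError("each stage efficiency must be in (0, 1]")
    return math.prod(stages)


if __name__ == "__main__":
    # Hypothetical: five conversions, each assumed to be 96 percent efficient.
    five_stage = chain_efficiency([0.96] * 5)
    # Hypothetical: a single conversion at the same per-stage efficiency.
    one_stage = chain_efficiency([0.96])
    print(f"five stages: {five_stage:.3f}, one stage: {one_stage:.3f}")
```

Even with stages that look efficient on their own, five of them in a row lose noticeably more power than one, which is the basic case for cutting the number of conversions.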

Converting AC to DC power just once at the gateway can reduce power consumption by 10 percent over a state-of-the-art AC datacenter and more than 20 percent over a conventional one. Additionally, DC equipment costs around 30 percent to 35 percent less to buy and install and requires less air conditioning.

But the biggest savings come in floor space. A DC datacenter requires 25 percent to 40 percent less square footage than its AC counterparts, largely because computer equipment can connect directly to backup batteries. Rooms full of uninterruptible power supplies (UPS) and other equipment aren’t needed.

Companies such as JPMorgan, Sprint, Boeing, and Bank of America have built DC datacenters already. SAP told us its DC datacenter saves $24,000 a year.

Validus DC Systems and Nextek Power Systems have created systems that convert high voltage AC and distribute DC through racks in big buildings. General Electric recently bought Lineage Power Holdings, which makes similar equipment, for $520 million.

Conversely, Transphorm doesn’t tinker with the AC power structure. Instead, it has a gallium nitride power converter that is more efficient than silicon converters. Silicon AC-DC converters can be 90 percent or more efficient. Transphorm claims it can tackle 90 percent of that last 10 percent. It’s new -- others have tried to work with gallium nitride with mixed results -- and Transphorm will have to compete against behemoths like Texas Instruments, but it’s a compelling idea.
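To make the "90 percent of that last 10 percent" claim concrete, here is the arithmetic as a small Python sketch. It simply takes the claimed figures at face value; the function name is made up for illustration.

```python
def improved_efficiency(baseline_efficiency, loss_reduction):
    """Efficiency after removing a share of the remaining conversion loss.

    baseline_efficiency -- e.g. 0.90 for a 90 percent efficient converter
    loss_reduction      -- share of the remaining loss eliminated, e.g. 0.90
    """
    if not 0.0 <= baseline_efficiency <= 1.0:
        raise ValueError("baseline_efficiency must be between 0 and 1")
    if not 0.0 <= loss_reduction <= 1.0:
        raise ValueError("loss_reduction must be between 0 and 1")
    remaining_loss = (1.0 - baseline_efficiency) * (1.0 - loss_reduction)
    return 1.0 - remaining_loss


if __name__ == "__main__":
    result = improved_efficiency(0.90, 0.90)
    print(f"claimed efficiency: {result * 100:.0f} percent")
```

In other words, if the claim holds, a converter that wastes 10 percent of its input would waste about 1 percent instead, for roughly 99 percent efficiency.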

Monitoring and Management. Several startups are mining this area, each with a slight tweak on the same basic formula. Both SynapSense and Sentilla started out as tools to monitor the ambient environment -- temperature, pressure, humidity -- inside datacenters and to provide recommendations for optimizing cooling and operations. Now both are moving toward automated, dynamic control.

Sentilla earlier this year moved toward monitoring and managing computers and applications. It’s a big shift. Data like this can also help companies determine whether they should outsource applications or keep them in-house. After 45 days with the new tool, one Sentilla customer found a way to shed 18 percent of its load.

Power Assure, meanwhile, started in that realm. It analyzes server loads to optimize use. Many large companies virtualize servers to maximize computing cycles, but sparingly. Too much virtualization can leave you vulnerable to traffic spikes. Power Assure next wants to move into controlling storage devices.

Another twist on the model: Racktivity has created a power delivery unit (a piece of hardware in a rack) that is coupled with management and monitoring tools similar to those from Power Assure and Sentilla. By combining hardware and software, Racktivity says it can give customers a better view into power consumption and reduce overall costs.

On the desktop side, Verdiem has tools that control sleep modes on desktops from a central location. It claims it can save large businesses $30 to $60 a year per PC. We’ve been waiting for Cisco or Microsoft to buy them.

Servers and Processors. SeaMicro has created a server composed of 512 or 256 Intel Atom chips. You wouldn’t use this server to render a 3D movie, but it works well for popping up static web pages. The company’s server consumes one-fourth of the power of conventional servers, takes up one-fourth of the space, and can be more reliable, because 90 percent of the components have been eliminated.

The secret sauce is a networking chip that optimizes computing loads.

Calxeda, meanwhile, is designing a server chip based around an energy-efficient processor core from ARM. Nvidia, Freescale, Marvell and others are doing the same. Microsoft has said it will support ARM in the next version of Windows, so the concept of an ARM server is more real than in the past. An ARM-based server also offers a functional advantage: lower energy consumption. The other non-Intel efforts Microsoft backed in the past -- MIPS chips, Alpha -- just provided an alternative manufacturer. Thus, this one might have legs.

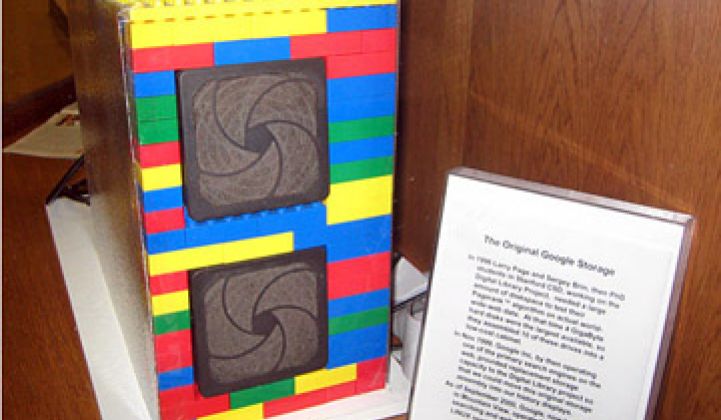

Storage and Memory. Advocates have said for the last six years that flash memory chips would soon start to replace spinning hard drives in notebooks, servers and datacenters. It’s still a work in progress, thanks to the relentless efforts by the hard drive industry to improve their products and some of the inherent technological issues with flash. (PS: that picture, which I took while at CNET, is the first Google storage device ever.)

The picture, though, is changing. SandForce has come up with a controller that extends the life of flash memory chips. IBM is working with the company. Then there are outfits like Fusion-io that have improved the overall functionality and performance of flash modules.

Other ideas -- phase change memory, racetrack memory, spintronics, spin/transfer torque, carbon nanotubes -- exist, but don’t hold your breath for them to go mainstream soon.

Lights and Sensors. Redwood Systems has created an LED lighting system for commercial buildings that makes it easy to dynamically dim lights as well as to monitor motion or carbon monoxide sensors. It also reduces the use of natural resources: rather than depend on copper wire, Redwood can link lights and nodes through a communication cable that looks and acts a lot like Ethernet.

While Redwood’s system can be used in any sort of office space, many customers are buying it for datacenters first, and for good reason. Datacenter space can cost $100 a square foot to manage. Regular office space might cost only $25.

Nextek is in this market too. The Emerge Alliance -- which includes Nextek, Johnson Controls, Armstrong Industries and others -- has come up with a way to deliver DC power to LEDs (which inherently run on DC power) over the metal frame of the building. Wires, in other words, can be eliminated. A trial can already be seen at the Whole Foods in Berkeley.