Open-source software -- code that’s free for anyone to use, often on the condition that they share the changes they make to it -- plays a small but growing role in the smart grid. Examples include OpenADR, a standard for automating demand response backed by Lawrence Berkeley National Laboratory and the California Energy Commission, and OpenPDC, the Tennessee Valley Authority’s Hadoop-based tool for managing transmission grid synchrophasor data.

Of course, the open-source software developed by Department of Energy labs, federal power authorities like TVA and other public entities tends to start out as a tool for modeling experiments, storing data or performing other research functions. Turning that capability into systems that are robust, interoperable and secure enough for use on real-world grids takes a lot of extra effort.

At DistribuTECH last week, Midwest utility giant AEP and Battelle, the Columbus, Ohio-based group that helps manage $6.5 billion in annual research and development budgets for customers including DOE’s Pacific Northwest National Laboratory (PNNL), unveiled their latest effort to put open-source code to work on the grid. The tool is called Grid Command, and it’s an interesting mashup of open-source code from PNNL, called GridLAB-D, and a lot of new data and functionality built to suit AEP’s needs.

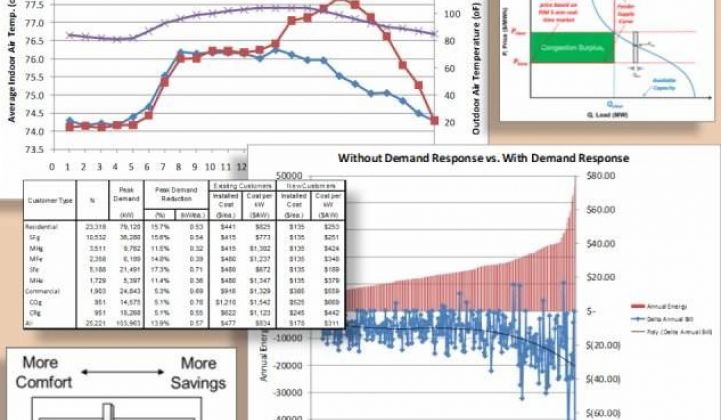

It’s all part of AEP Ohio’s gridSMART demonstration project, which won a $75 million DOE stimulus grant in 2009 to test out more than a dozen different smart grid technologies, including distribution management, volt/VAR control, advanced metering, home area networks and community energy storage. The goal is to cut demand by 15 megawatts and save customers more than $5 million over the lifetime of the project.

Battelle’s role was to figure out how all these technologies might affect the distribution grid as they scaled up, Jason Black, grid systems research leader at Battelle, said in an interview last week. Besides the not-so-simple task of measuring and reporting on each system’s performance, AEP and DOE wanted to better predict how power quality -- and cost -- would change as the new technologies reached different levels of penetration.

From Science Kit to Utility Platform

To start figuring that out, Battelle turned to GridLAB-D, a software tool that has been in development at PNNL since 2007 to solve the complex math problems involved in modeling real-world power flows (including both active and reactive power) on the grid. While other Battelle projects, such as its partnership with Gridquant, focus on the transmission side, GridLAB-D focuses on the distribution grid that delivers stepped-down power to end users.
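For readers unfamiliar with the active/reactive distinction, the short Python sketch below shows the basic arithmetic for a single load: complex power is voltage times the conjugate of current, its real part is active power (watts, the energy actually consumed) and its imaginary part is reactive power (vars, the energy that sloshes back and forth in motors and transformers). This is only an illustration of the concept, not how GridLAB-D solves feeder-wide power flows; the 240-volt, 20-amp, 30-degree-lagging load is a hypothetical example.

```python
import cmath
import math


def complex_power(v_rms: complex, i_rms: complex) -> complex:
    """Return complex power S = V * conj(I), in volt-amperes."""
    return v_rms * i_rms.conjugate()


# Hypothetical load: 240 V at 0 degrees, drawing 20 A that lags by 30 degrees
voltage = cmath.rect(240.0, 0.0)
current = cmath.rect(20.0, math.radians(-30.0))

s = complex_power(voltage, current)
active_w = s.real          # active (real) power, watts
reactive_var = s.imag      # reactive power, vars (positive = inductive)
power_factor = active_w / abs(s)

print(f"S = {abs(s):.0f} VA, P = {active_w:.1f} W, "
      f"Q = {reactive_var:.1f} var, PF = {power_factor:.3f}")
```

A power-flow tool repeats this kind of calculation across every node and line on a circuit, and has to solve them all simultaneously, which is where the heavy math comes in.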

Because it’s a tool developed by scientists, it has a lot of powerful capabilities, Black said. But it also lacked a user-friendly interface, both for entering the data needed for such complex grid modeling calculations and for returning results, he said. Grid Command is Battelle’s answer to those problems: it was developed for AEP and is now available to other users as well.

“When we started the project, AEP was spending four to five days to build a full-scale model of one of their distribution circuits,” Ivan Tornes, the principal research scientist at Battelle who led the software team that built it, told me last week. Once Battelle had built the software to import all the data needed automatically, “We took that process to about 30 seconds,” he said.

Grid Command also has a new GIS interface, with maps that users can spin, zoom, pan and otherwise manipulate, as well as click on to get more information about each component, he said. On the post-processing side, Battelle has built tools to translate GridLAB-D-formatted data into simple files that open with a double click, and has also automated the functions project managers most often need to calculate the results they will eventually turn over to DOE, he said.

In other words, Battelle has taken a tool built by scientists and turned it into a utility product for modeling, forecasting and optimization. It’s important to note that Grid Command does not, as yet, include direct control capabilities -- a key difference from the distribution grid management software built by grid giants like Siemens, General Electric, ABB, Alstom and Schneider Electric or IT giants like IBM and Oracle.

Big Data Driving Open-Source Into the Grid

It’s hard to see open-source software taking off in the utility or industrial controls sector, of course. But we’ve certainly seen a surge in the use of open-source tools for managing the grid’s big data needs, which can include the complex modeling needed to predict the impact of intermittent wind and solar power, plug-in vehicles and other such disruptive technologies.

“We’re starting to understand some of the interactions,” Black said. “Say if you put volt/VAR on a circuit with advanced demand response and battery storage -- are those complementary, or are they going to cancel out the benefits of one another?” Battelle and AEP are busy testing out thousands of scenarios like this, he said, and expect to have a report out in mid-2013 on the subject.

Speaking of data, one of the interesting perks of using GridLAB-D is the wealth of home energy modeling data it brings, Tornes said. PNNL has been collecting home energy data for grid purposes for more than a decade now, and led the GridWise Alliance project, one of the first large-scale tests of controlling home appliances and air conditioners to meet grid demands. GridLAB-D takes all that data and includes it in its models, he said -- an important factor, given that we’re talking about distribution grids, where each end user is an integral part of the equation.

GridLAB-D’s market module can also account for the impact of smart grid functions on end users, Black said. That’s important for calculating things like how long a utility can turn down large numbers of home electric water heaters before people start to complain of cold showers. In the longer view, AEP wants to use the tool to figure out how different pricing schemes or tariffs will change energy use patterns over time, he said.
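To make the cold-shower question concrete, here is a deliberately simple Python sketch that estimates how many minutes a curtailed water heater can keep its tank above a comfort temperature. It assumes a single, fully mixed tank that loses heat through its walls and to hot-water draws replaced by cold inlet water; the tank size, insulation value, temperatures and draw rates are all hypothetical, and this is not GridLAB-D’s water heater model.

```python
from dataclasses import dataclass
from typing import Optional

WATER_DENSITY_KG_PER_L = 1.0
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0


@dataclass
class WaterHeater:
    # Hypothetical parameters for a roughly 50-gallon residential tank
    volume_l: float = 189.0
    ua_w_per_k: float = 2.0        # standby heat-loss coefficient
    inlet_temp_c: float = 12.0     # cold water replacing each draw
    ambient_temp_c: float = 20.0   # air around the tank


def minutes_until_cold(heater: WaterHeater, start_temp_c: float,
                       comfort_temp_c: float, draw_l_per_min: float,
                       max_minutes: int = 24 * 60) -> Optional[int]:
    """Step a fully mixed tank forward one minute at a time with the
    element switched off. Returns the first minute at which the water is
    below comfort_temp_c, or None if that never happens within max_minutes."""
    mass_kg = heater.volume_l * WATER_DENSITY_KG_PER_L
    heat_capacity_j_per_k = mass_kg * WATER_SPECIFIC_HEAT_J_PER_KG_K
    draw_kg_per_s = draw_l_per_min * WATER_DENSITY_KG_PER_L / 60.0

    temp_c = start_temp_c
    for minute in range(max_minutes + 1):
        if temp_c < comfort_temp_c:
            return minute
        # Heat lost through the tank walls (watts)
        standby_loss_w = heater.ua_w_per_k * (temp_c - heater.ambient_temp_c)
        # Heat carried away by hot water leaving and cold water entering (watts)
        draw_loss_w = (draw_kg_per_s * WATER_SPECIFIC_HEAT_J_PER_KG_K
                       * (temp_c - heater.inlet_temp_c))
        # Explicit Euler step over 60 seconds
        temp_c -= (standby_loss_w + draw_loss_w) * 60.0 / heat_capacity_j_per_k
    return None


tank = WaterHeater()
print(minutes_until_cold(tank, start_temp_c=50.0, comfort_temp_c=43.0,
                         draw_l_per_min=2.0))
```

Even this toy model shows why end-user behavior matters: an idle tank can ride through a curtailment for hours, while one serving a steady draw cools noticeably within tens of minutes.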

That aspect of the gridSMART project ties it to another, larger DOE smart grid project in the Pacific Northwest, whose partners include IBM, 3TIER, QualityLogic, Alstom Grid and Netezza, as well as Battelle, and which aims to measure the value of a huge array of grid assets based on real-time grid data. The idea is to build a “transactive control” system across five states that will better align energy prices with real-world grid needs -- a data problem of enormous scope and speed.

There’s huge demand for technology to make sense of the proverbial “data tsunami” utilities are facing with the advent of the smart grid. It’s in this realm that open-source tools like Hadoop and its MapReduce framework will likely find their first home in the smart grid. Full-scale transactive control schemes are still years away, though microgrids -- islands of self-managed power that can detach from or support the grid at large -- could serve as an early model of how new technologies can find value in playing grid energy markets.
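The MapReduce pattern behind Hadoop is easier to picture with a small example. The plain-Python sketch below rolls up smart meter readings into hourly load per feeder using the same three stages: map each record to a key/value pair, shuffle values into groups by key, then reduce each group. It uses no Hadoop API, and the (meter, feeder, hour, kWh) record format is a hypothetical stand-in for real meter data; on a cluster, the same map and reduce functions would run in parallel across many machines.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical record format: (meter_id, feeder_id, hour_of_day, kwh)
Reading = Tuple[str, str, int, float]
Key = Tuple[str, int]  # (feeder_id, hour_of_day)


def map_reading(reading: Reading) -> Iterator[Tuple[Key, float]]:
    """Map stage: emit ((feeder, hour), kWh) for one meter reading."""
    _meter_id, feeder_id, hour, kwh = reading
    yield (feeder_id, hour), kwh


def shuffle(pairs: Iterable[Tuple[Key, float]]) -> Dict[Key, List[float]]:
    """Shuffle stage: group every emitted value under its key."""
    groups: Dict[Key, List[float]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_sum(key: Key, values: List[float]) -> Tuple[Key, float]:
    """Reduce stage: total the energy for one feeder-hour."""
    return key, sum(values)


def feeder_hourly_load(readings: Iterable[Reading]) -> Dict[Key, float]:
    pairs = (pair for r in readings for pair in map_reading(r))
    grouped = shuffle(pairs)
    return dict(reduce_sum(k, vs) for k, vs in grouped.items())


sample = [
    ("m1", "F1", 0, 1.5),
    ("m2", "F1", 0, 2.0),
    ("m3", "F2", 0, 0.5),
    ("m1", "F1", 1, 1.0),
]
print(feeder_hourly_load(sample))
```

Because each map call looks at a single record and each reduce call sees only one key’s values, the work splits naturally across machines, which is what makes the approach attractive for millions of meters.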

But while utilities are busy handling their in-house grid data management problems, a whole new world of data about their customers awaits integration into their master plans. Technologies like OpenADR or the Green Button initiative for standardized consumer energy data are making it possible for third parties to collect and manipulate data for all kinds of purposes.

That’s another area where open-source approaches could deliver value -- and where consumer-facing smart grid specialists like Opower are investing a lot of time and money. A grid modeling tool that can also predict how customers are likely to react makes GridLAB-D a particularly interesting platform on that front.