The deployment of distributed solar has the potential to force drastic changes to the electric utility industry. Changes in rate structures, government and utility incentives, customer loyalty, consumerization of supply, and consumption of power are being discussed in a variety of states with growing rates of solar penetration, such as Arizona and Hawaii.

These large, business-defining discussions steal the spotlight in the news and at industry conferences, often leaving the more technical questions and obstacles for utility engineers to resolve. GTM recently launched the Grid Edge Executive Council to tackle these issues and more.

Traditional planning and engineering analysis

Targeting feeder improvements to expand capacity for distributed solar in a cost-effective and technically sound manner is a labor- and cost-intensive process. Traditional engineering analysis lacks the robust modeling of distribution laterals and secondary distribution needed to understand the effects of customer-connected generation. Instead, most utilities model feeders beyond the distribution substation as a single fixed load or a series of loads with little or no dynamic behavior.

Historically, system power flow models used in planning aggregated the expected or measured load at the primary level, separating loads only where equipment such as reclosers, capacitors, voltage regulators, or automated switches was added to primary distribution.

Such models were used to study worst-case scenarios under peak loading, zero load, fault conditions, and other factors. Engineers use software to run scores of static safety and stability scenarios to test various equipment configurations, select equipment, and determine the effect of various shifts in load patterns or construction. This process of scenario testing has traditionally been performed before construction, during periodic feeder upgrades, and when extensions or new construction add to the feeder’s load.

Using modern distribution management system power flow models, loads can be broken out by distribution transformer. This has extended engineering and simulation analysis software visibility to the secondary transformer. To simulate the secondary, utilities continue to apply static rule-of-thumb voltage drops that provide little insight into loading conditions or solar feed-in to a secondary bus.
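To illustrate why a fixed rule of thumb says little about solar feed-in, here is a minimal sketch that compares a fixed-percentage drop with the common approximation ΔV ≈ (P·R + Q·X)/V. The function name, the 3 percent rule, the conductor impedances, and the load values are all illustrative assumptions, not figures from any utility's practice.

```python
RULE_OF_THUMB_DROP = 0.03  # assumed fixed 3% drop, for illustration only
V_NOMINAL = 240.0          # assumed nominal secondary voltage (volts)

def approx_voltage_drop(p_kw, q_kvar, r_ohm, x_ohm, v_nominal=V_NOMINAL):
    """Approximate voltage drop in volts along a secondary conductor.

    Uses dV ~ (P*R + Q*X) / V. A negative result means the voltage
    rises toward the customer, which happens when net real power is exported.
    """
    return (p_kw * 1000.0 * r_ohm + q_kvar * 1000.0 * x_ohm) / v_nominal

# The static rule gives the same answer regardless of what the customer is doing.
static_drop = RULE_OF_THUMB_DROP * V_NOMINAL

# Hypothetical customer: 5 kW / 1 kvar load, 0.05 + j0.03 ohm service conductor.
evening = approx_voltage_drop(5.0, 1.0, 0.05, 0.03)        # load only
midday = approx_voltage_drop(5.0 - 6.0, 1.0, 0.05, 0.03)   # 6 kW of solar offsets the load

print(f"static rule: {static_drop:.2f} V drop")
print(f"evening:     {evening:.2f} V drop")
print(f"midday:      {midday:.2f} V (negative means a rise)")
```

The fixed rule cannot change sign, so it cannot show the voltage rise that appears once solar output exceeds the local load.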

When new generation is added to the transmission and sub-transmission grid, more diligent engineering analysis studies are undertaken. For large solar arrays (those larger than 300 kW), utilities assess whether a distribution or transmission line can handle the new generation by running specialized interconnection studies, which often cost more than $10,000.

These studies require specialized utility engineers or outside consultants to collect both paper and digital records for the feeder and local substation, evaluate their completeness, gather additional information, improve the model, and run scenario and/or simulation tests on the effect of connecting the array to a feeder. Finally, these experts test and select potential upgrades, either mitigation equipment (such as static VAR compensators or battery storage systems) or traditional feeder upgrades (such as reconductoring or installing a larger secondary transformer).

What makes distributed solar different?

Due to their size, distributed solar plants do not require (and indeed, cannot economically pay for) this level of detailed study. To date, utilities have typically created conservative penetration limits for small-scale solar systems on a feeder. The intermittent nature, clustered deployment, and uncertain future customer adoption of these systems create dynamic conditions over the course of seconds, minutes, days, seasons, and years. Scenario-based studies that are performed periodically over the many decades that feeders remain in service cannot cost-effectively model the effect of each additional solar plant installed on a feeder.
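A conservative penetration limit of this kind can be sketched as a one-line screen. The function name and the 15 percent of feeder peak load threshold below are assumptions chosen for illustration; the article does not state which limits utilities actually apply.

```python
def passes_penetration_screen(existing_pv_kw, new_pv_kw, feeder_peak_kw,
                              limit_fraction=0.15):
    """Return True if total PV on the feeder stays within the assumed limit.

    limit_fraction is a hypothetical threshold expressed as a share of
    the feeder's peak load; it is not taken from the article.
    """
    return existing_pv_kw + new_pv_kw <= limit_fraction * feeder_peak_kw

# Hypothetical feeder: 5,000 kW peak load with 700 kW of solar already installed.
print(passes_penetration_screen(700.0, 40.0, 5000.0))  # small rooftop system
print(passes_penetration_screen(700.0, 60.0, 5000.0))  # slightly larger system
```

A screen like this is cheap to apply, but it ignores where on the feeder the systems cluster and when they produce, which is the gap the rest of this article addresses.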

What utilities need to do

To customize the analysis process effectively for smaller systems, utilities must improve their engineering analysis software, their simulation software, and the systems and foundational data that inform them.

Utility modeling and simulation software has to move down to the level of the customer premises, giving utilities the ability to cluster solar or other resources on a secondary bus and determine the effect of these resources on the customer’s power quality. This effect will have to be modeled not only in static scenarios, but also in dynamic time-series simulations with adjustable time steps that can evaluate the status of the system at one-second or even sub-second intervals over a full year. Historical SCADA data, weather data, and interval data from AMI could be used to increase the accuracy of these simulations.
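The idea of a time-series simulation with an adjustable time step can be sketched in a few lines. Everything in this example is a simplifying assumption: the load and solar profiles are synthetic, all homes are lumped onto one secondary bus behind a single resistance, reactive power is ignored, and the 0.95 to 1.05 per-unit band is used as the acceptable voltage range. A production tool would use a full power flow solver and measured SCADA, weather, and AMI data instead.

```python
import math

V_NOMINAL = 240.0   # assumed nominal service voltage (volts)
SOURCE_PU = 1.03    # assumed transformer secondary voltage (per unit)
R_OHM = 0.04        # assumed resistance of the shared secondary conductor (ohms)

def load_kw(hour):
    """Hypothetical per-home load: 1 kW base plus a 2 kW evening peak."""
    return 1.0 + (2.0 if 17.0 <= hour < 21.0 else 0.0)

def solar_fraction(hour):
    """Hypothetical clear-sky output as a fraction of rating (half sine, 06:00 to 18:00)."""
    return max(0.0, math.sin(math.pi * (hour - 6.0) / 12.0))

def simulate_day(n_homes, pv_kw_per_home, step_seconds=900):
    """Return a list of (hour, voltage_pu) pairs, one per time step of a single day."""
    results = []
    for i in range(86400 // step_seconds):
        hour = i * step_seconds / 3600.0
        # Net power drawn at the bus in watts; negative means export to the grid.
        net_w = n_homes * (load_kw(hour) - pv_kw_per_home * solar_fraction(hour)) * 1000.0
        # Simplified voltage estimate: the source voltage minus a resistive drop.
        v_pu = SOURCE_PU - net_w * R_OHM / V_NOMINAL ** 2
        results.append((hour, v_pu))
    return results

def count_violations(results, low=0.95, high=1.05):
    """Count time steps whose voltage falls outside the assumed acceptable band."""
    return sum(1 for _, v_pu in results if v_pu < low or v_pu > high)

no_pv = simulate_day(n_homes=6, pv_kw_per_home=0.0)
clustered_pv = simulate_day(n_homes=6, pv_kw_per_home=8.0)
print("violations without solar:", count_violations(no_pv))
print("violations with clustered solar:", count_violations(clustered_pv))
```

Lowering `step_seconds` increases the resolution of the same loop, which is how the step size can be tuned to the phenomenon being studied, at the cost of more computation.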

An accurate view of the as-built distribution system is absolutely necessary to effectively model the interactions of many types of equipment together on a feeder. This requirement for clean data is potentially the most difficult, time-consuming, costly, and frustrating to fulfill. This process involves improving data capture and integrity in planning and GIS systems and detailed, continuous updating and review of GIS data to ensure the as-built environment is correctly represented in simulation software.

Lastly, the basic models for various dynamic equipment (such as smart inverters, battery storage, and grid power electronics) have to continue to evolve. Today, most of these models lack the accuracy needed to simulate the effects of placing multiple devices on a single feeder. Efforts to improve these models continue in the realm of open-source analysis and simulation solutions such as OpenDSS and GridLAB-D.

If adopted, these improvements will allow the use of what-if simulations that examine the effects of additional deployment of a variety of traditional or intelligent equipment.
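A what-if study of this sort might compare how much solar a secondary can carry before and after an upgrade. The sketch below is a toy under stated assumptions: a single lumped bus, a purely resistive voltage estimate, a 1.05 per-unit upper limit, and hypothetical resistance values standing in for the existing conductor and a reconductored one. The function name and all parameters are illustrative.

```python
def max_pv_kw(r_ohm, min_load_kw=6.0, source_pu=1.03, v_limit_pu=1.05,
              v_nominal=240.0, step_kw=0.5, cap_kw=500.0):
    """Largest PV size (kW, in step_kw increments) that keeps voltage within the limit.

    Evaluates the worst case for voltage rise: full solar output
    coinciding with the assumed minimum daytime load.
    """
    pv_kw = 0.0
    while pv_kw < cap_kw:
        candidate = pv_kw + step_kw
        net_w = (min_load_kw - candidate) * 1000.0   # negative means export
        v_pu = source_pu - net_w * r_ohm / v_nominal ** 2
        if v_pu > v_limit_pu:
            break  # the candidate size would violate the limit
        pv_kw = candidate
    return pv_kw

existing = max_pv_kw(r_ohm=0.04)   # hypothetical existing conductor
upgraded = max_pv_kw(r_ohm=0.02)   # hypothetical reconductored secondary
print(f"existing conductor: {existing} kW, after upgrade: {upgraded} kW")
```

Running the same function with different equipment parameters is the essence of a what-if simulation; real tools apply the same pattern to full feeder models.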

Utilities are on the forefront

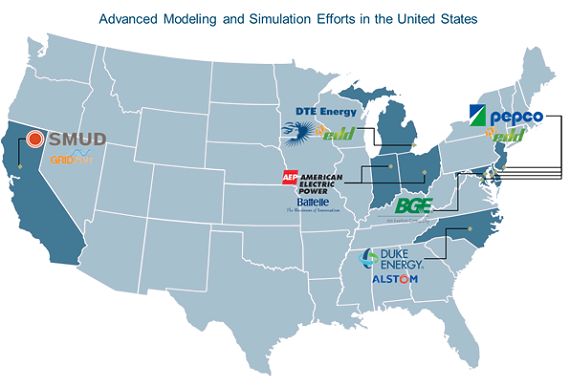

Select utilities around the country are beginning to integrate more advanced analysis and simulation software through efforts to improve open source tools such as GridLAB-D and OpenDSS. American Electric Power is working with Battelle to enhance the modeling of dynamic resources such as solar and closed-loop conservation voltage reduction on distribution feeders using GridLAB-D as the foundational software.

Source: GTM Research

Other notable efforts are underway with simulation and power flow modeling vendors to develop new and more effective simulation packages for designers and distribution engineers. In North Carolina, the proliferation of solar has pushed Duke Energy Carolinas to make use of the distribution simulator within its distribution management system to perform what-if simulations. This system has been used to model the short- and long-term effects of various solar adoption scenarios on the stability of distribution feeders, as well as on Duke’s North Carolina territory. Sacramento Municipal Utility District and Pepco have taken a different approach, using data from a variety of sources to build and test fast-power-flow engines that simulate the conditions encountered when new renewable generation is added to the grid.

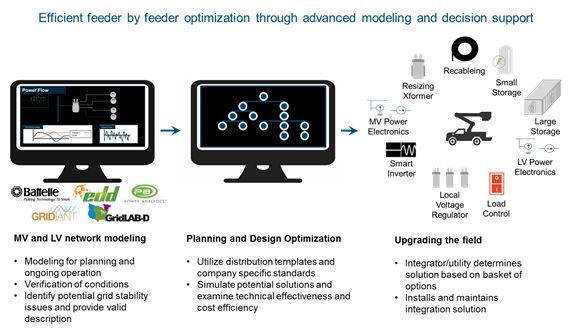

These projects, coupled with others at Baltimore Gas and Electric and Detroit Edison, are taking the first steps toward embracing distributed energy resources. Such systems perform medium-voltage (MV) and low-voltage (LV) network modeling to improve the planning and design of new and existing distribution systems.

A vision of the future

As these systems and their underlying models improve and deepen, regulated utilities are beginning to provide customized generation zoning services for customers. With this service, utilities have an inherent advantage due to their knowledge of their own distribution grid and strengths in safety. These systems could also permit utilities to better identify and understand the effect of equipment or system changes that can be made to increase the carrying capacity on distribution feeders. This will not only empower customers looking to install distributed energy resources and ensure system stability, but will also provide utilities with an effective means of quickly adjusting to changing legislative and regulatory policies that seek to accurately value customer-sited resources.

Source: GTM Research

***

Ben Kellison is a Senior Grid Analyst at GTM Research. For more information on next-generation utility services, business models and distributed renewable integration, join Ben and the rest of GTM Research at Grid Edge Live on June 24-25.