What’s the secret to converting smart meters, solar panels, batteries, and grid assets into a field-distributed, self-managing, integrated data analytics machine?

At its smart grid test bed in Charlotte, N.C., massive U.S. utility Duke Energy has an experiment underway seeking an answer to that question. The effort involves a host of top-line smart grid IT vendors, an unusual grid architecture centered around communications nodes scattered throughout the grid, and a $35 piece of off-the-shelf computing hardware that plays a critical role.

Raiford Smith, Duke’s director of smart grid emerging technology, laid out Duke’s work on this distributed-computing-on-the-grid experiment during a Tuesday session at Greentech Media’s Soft Grid 2013 conference in San Francisco. Here’s a breakdown of what could be one of the most important concepts in distributed grid operations and data analytics out there.

If that seems like a big claim, it is, and Smith conceded as much on stage, noting that he’s heard plenty of doubts about it from smart grid vendors and utilities. Indeed, Duke is one of the few utilities in North America that has added a key piece of equipment, its smart grid communications nodes, to every smart metering project it has rolled out to date in order to enable this concept.

So far, Duke has worked with nodes from Cisco, Alcatel-Lucent and Ambient Corp., and it is using Ambient’s devices in its Charlotte project. Adding multi-communications nodes like these raises total equipment and deployment costs, but the nodes also serve as the key exchange point for the distributed architecture Smith described.

“My boss looked at me and said, ‘That’s an over-engineered AMI solution,’” he noted. “I said, ‘That’s an underutilized asset.’ And we’re both right.” Simply running smart meter billing over a multi-communications-enabled network would be a waste of money -- but Duke isn’t just doing that, he said.

Instead, “We’re building out a tiered architecture of analytics and capabilities” using a set of nodes that, while fairly unimpressive as individual computing machines, yield quite a bit of heft in aggregate. The 3,000 nodes in Charlotte, for instance, represent nearly the same processing power and memory capacity as Deep Blue, the IBM supercomputer that defeated world chess champion Garry Kasparov in a six-game match in 1997, and the 150,000 nodes being deployed in Duke’s Cincinnati, Ohio, territory add up to about half the computing power of IBM’s Watson supercomputer.

Of course, comparisons like these don’t get at the heart of the challenge Duke is taking on, which is to get this computing power working as a holistic IT asset. Doing that requires the communications node, that central piece of IT infrastructure, to interoperate with the rest of the assets and data formats on the grid, or, as Smith put it, to use “one communications node to create the 'internet of things' for the utility.”

Building the Distributed Grid Computing Platform From the Node Up

Duke’s Charlotte test bed, known as McAlpine for the main substation it surrounds, consists of six distribution circuits, distribution automation and line sensor equipment, and about 14,000 customers connected via powerline-carrier-equipped smart meters, as well as Itron AMR meters. All of that gear is being connected with both 3G and LTE cellular backhaul networks, which are also supporting a range of in-home load monitoring systems, a set of solar PV arrays, and some grid batteries.

That’s a bewildering mix of future grid test cases to manage, but also a test of the capabilities of the distributed architecture Duke wants to prove out. In the case of this R&D project, Duke beefed up the capabilities of its Ambient comms nodes with a $35 piece of computing hardware beloved by hackathon participants and middle-school programming instructors everywhere: the Raspberry Pi.

Adding this credit-card-sized circuit board to its grid nodes allowed Duke to handle some of the more complex computing tasks it wanted to accomplish out at the edges of the network, Smith said in an interview before his Tuesday Soft Grid presentation. While that work, along with every other part of the Charlotte project, is still strictly in the R&D stage, it certainly indicates how the broader world of computing is interacting with the smart grid.

As for what Duke is asking of its distributed architecture, Smith described it as a combination of better performance, lower cost, and a set of future functions that combine both grid-side and consumer-side imperatives in a single platform. Beyond that, it could allow networked devices to react and adapt to disruptive influences like distributed generation, plug-in vehicles, energy-aware consumer demand management systems and the like, in ways that today’s centralized grid IT infrastructure would struggle to match.

Take a fairly typical grid event: clouds pass over a solar PV array, its output drops, and a nearby grid battery needs to discharge power to balance it out. In a typical centrally managed utility system, a smart meter might detect the resulting voltage drop and send that data via cellular communications back to the utility head-end, which then waits for corroborating data from other parts of the system to roll in before sending the battery its commands. That process can take about fifteen minutes, he said.

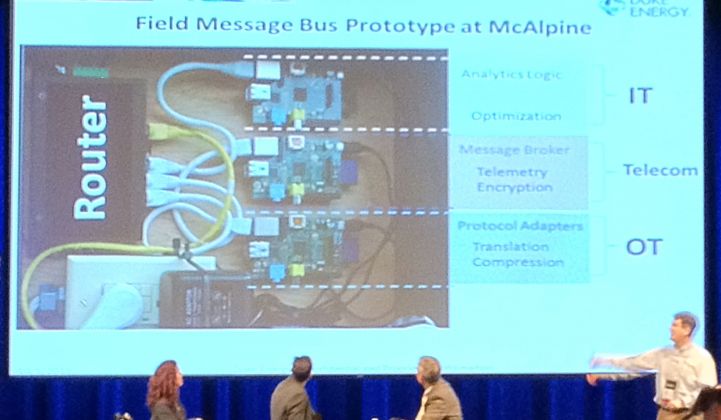

With Duke’s experiment, in contrast, “the line sensor sends its data to a communications node conveniently nearby,” which contains a message bus device -- the Raspberry Pi, in this instance -- that “acts as neutral broker of data sets, to allow applications to have access to it,” he said. From there, “That communications node chooses how to react for that battery, tells it what to do -- and then sends that information back to the head-end to tell it what happened,” he said.
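To make the "neutral broker" idea concrete, here is a minimal in-process publish/subscribe sketch in Python. It is not Duke’s software: the `MessageBus` class, the topic names, the 0.95 per-unit voltage threshold and the battery command format are all hypothetical, chosen only to show how a sensor reading can reach a local app that decides on a battery action and then reports what happened to the head-end.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[str, dict], None]


class MessageBus:
    """Hypothetical in-process broker: apps subscribe to topics, devices publish to them."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        # Deliver to every handler registered for this exact topic.
        for handler in list(self._subscribers[topic]):
            handler(topic, payload)


bus = MessageBus()
battery_commands: List[dict] = []  # what the battery inverter would receive
head_end_log: List[dict] = []      # stands in for the report sent back to the head-end


def battery_app(topic: str, payload: dict) -> None:
    # Hypothetical rule: discharge when line voltage sags below 0.95 per unit.
    if payload["voltage_pu"] < 0.95:
        command = {"battery": "B1", "action": "discharge", "kw": 50}
        bus.publish("battery/B1/command", command)
        # Tell the head-end what was decided locally, after the fact.
        bus.publish("headend/events", {"trigger": payload, "command": command})


bus.subscribe("sensor/line1/voltage", battery_app)
bus.subscribe("battery/B1/command", lambda t, p: battery_commands.append(p))
bus.subscribe("headend/events", lambda t, p: head_end_log.append(p))

# A line sensor reports a sag; the decision happens at the node, not the head-end.
bus.publish("sensor/line1/voltage", {"voltage_pu": 0.93})
print(battery_commands)   # [{'battery': 'B1', 'action': 'discharge', 'kw': 50}]
print(len(head_end_log))  # 1
```

In a field deployment the callbacks would be replaced by a real broker speaking a protocol such as MQTT or AMQP, but the decoupling is the same: the sensor, the battery and the head-end only agree on topics and payloads, not on each other’s interfaces.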

Because all of this happens in the field, “We went from fifteen minutes to a quarter-second response time, with three different batteries, three different inverters, and two different metering types,” he said. Including the Raspberry Pi device and additional integration costs, that adds up to a “total cost here of $50 to tie six vendors together.”
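Taking the two response times Smith quoted at face value, the improvement works out to roughly a 3,600-fold reduction:

```latex
\frac{t_{\text{central}}}{t_{\text{edge}}}
  = \frac{15\ \text{min} \times 60\ \text{s/min}}{0.25\ \text{s}}
  = \frac{900\ \text{s}}{0.25\ \text{s}}
  = 3600
```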

Duke has been using messaging protocols such as MQTT for lightweight, low-overhead applications and AMQP for more robust messaging traffic, he said. At the same time, it’s been busy translating industry-standard protocols such as DNP3 for line sensors and capacitor banks, Modbus for smart meters and battery and solar inverters, and SNMP for remote transformer monitors.
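As a rough illustration of what "translating" a field protocol onto a shared message bus can involve, and not a description of Duke’s own code, the Python sketch below decodes a Modbus reading and rewraps it in a protocol-neutral topic and JSON payload. The register layout (two 16-bit registers holding an IEEE-754 float, high word first), the device name `inverter-7` and the `grid/<device>/<quantity>` topic scheme are all assumptions made for the example.

```python
import json
import struct
from typing import List, Tuple


def registers_to_float(registers: List[int], high_word_first: bool = True) -> float:
    """Combine two 16-bit Modbus registers into one IEEE-754 32-bit float.

    Word order varies by vendor; high-word-first is an assumption here.
    """
    if len(registers) != 2 or not all(0 <= r <= 0xFFFF for r in registers):
        raise ValueError("expected exactly two 16-bit register values")
    hi, lo = registers if high_word_first else registers[::-1]
    return struct.unpack(">f", struct.pack(">HH", hi, lo))[0]


def to_bus_message(device_id: str, quantity: str, value: float,
                   unit: str, source_protocol: str) -> Tuple[str, str]:
    """Wrap a decoded value in a protocol-neutral topic and JSON payload.

    The grid/<device>/<quantity> topic scheme is hypothetical.
    """
    topic = f"grid/{device_id}/{quantity}"
    payload = json.dumps({
        "device": device_id,
        "quantity": quantity,
        "value": value,
        "unit": unit,
        "source_protocol": source_protocol,
    })
    return topic, payload


# Two registers read from a hypothetical inverter: 0x4148 0x0000 encodes 12.5
kw = registers_to_float([0x4148, 0x0000])
topic, payload = to_bus_message("inverter-7", "ac_power", kw, "kW", "modbus")
print(topic)  # grid/inverter-7/ac_power
print(payload)
```

The same wrapper could in principle receive values decoded from DNP3 points or SNMP objects, so subscribers on the bus would not need to know which field protocol produced a given reading.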

Getting all its participating equipment vendors to turn these protocols into a set of mutually useful open standards wasn’t a simple task. “We created a coalition, and asked over 60 OEMs to join us. We got six,” he said.

Those vendors include Ambient, smart meter vendor Echelon, DMS provider Alstom, IT systems integrator Accenture, distribution automation and grid battery integration vendor S&C Electric and cellular provider Verizon, according to an abstract of the project that Duke plans to present at next year’s DistribuTECH conference. Smith said he's hoping that “other vendors will see the opportunities” of prepping their equipment to work with this open-source, distributed message bus architecture.

From Distributed Operations to Distributed Analytics

So far, we’ve been discussing purely operational aspects of Duke’s experimental architecture. But there are also big opportunities in using the same networked computing infrastructure for analytics, Smith said.

To get there, Duke first mapped its array of grid assets in terms of their data resources and availability, he said. Then it started collecting that data and merging it with existing telecommunications data, weather data, customer data and distributed energy resource data, validating it and normalizing it in a Hadoop-based data store.
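The validate-normalize-merge step can be pictured with a small in-memory Python sketch. It stands in for what would run at scale in a Hadoop-based store, and everything in it is assumed for illustration: the `MeterReading` fields, the ±10% voltage rule on a 120 V nominal, the hourly weather join and the made-up sample readings.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List


@dataclass
class MeterReading:
    meter_id: str
    timestamp: datetime
    energy_wh: float
    voltage_v: float


def is_valid(r: MeterReading, nominal_v: float = 120.0, tolerance: float = 0.10) -> bool:
    """Hypothetical rule: energy is non-negative and voltage is within +/-10% of nominal."""
    return r.energy_wh >= 0 and abs(r.voltage_v - nominal_v) <= tolerance * nominal_v


def normalize_and_merge(readings: List[MeterReading],
                        temp_c_by_hour: Dict[datetime, float]) -> List[dict]:
    """Drop invalid readings, convert Wh to kWh, and attach the hourly temperature."""
    rows = []
    for r in readings:
        if not is_valid(r):
            continue
        hour = r.timestamp.replace(minute=0, second=0, microsecond=0)
        rows.append({
            "meter_id": r.meter_id,
            "hour": hour.isoformat(),
            "energy_kwh": r.energy_wh / 1000.0,
            "voltage_v": r.voltage_v,
            "temp_c": temp_c_by_hour.get(hour),  # None when no weather record exists
        })
    return rows


# Made-up sample data for illustration only.
readings = [
    MeterReading("m1", datetime(2013, 10, 1, 14, 15), 850.0, 121.2),
    MeterReading("m2", datetime(2013, 10, 1, 14, 40), 640.0, 98.0),  # fails voltage check
    MeterReading("m3", datetime(2013, 10, 1, 15, 5), 1200.0, 118.7),
]
weather = {datetime(2013, 10, 1, 14): 24.5}
for row in normalize_and_merge(readings, weather):
    print(row)
```

Keeping validation as a separate predicate makes it easy to swap in stricter, asset-specific rules without touching the merge logic.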

From there, of course, Duke needed to bring all that data back to a platform that could present it in a single “pane of glass” for utility operators to see and act upon, he said. Duke has been working with three different vendors on that process, including SK Telecom subsidiary GridMaven, one of the new network management system offerings targeting the smart grid space.

“The hardest part of all of this is how to pay for it,” he added. “One of the things we’re trying to show here is that these kinds of systems can be cost-effective” -- and, at least in this R&D stage, the applications Duke is looking into do appear to show some hefty cost savings.

Take one example Smith laid out: using its communications nodes to give some of its lower-voltage transmission switches a wireless connection, in place of the expensive telephone-line access points and T-1 lines that link them today. That simple move could cut the costs of connecting those devices by about 90 percent, he said. Applied to distribution automation deployments, the same concept could cut costs by 16 percent to 33 percent, he said.

Another example looks at a single substation with 30 voltage regulators, each connected to Duke’s SCADA system via cellular modems, at a total cost of $36,000. “What we did instead is put a Wi-Fi modem in each of those, and one communications node” to connect them, at a total cost of $2,500, he said.
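The substation figures Smith cited work out as follows. This is plain arithmetic on the two quoted totals; the per-device split assumes costs are spread evenly across the 30 regulators, which the totals themselves don’t state.

```python
def cost_comparison(n_devices: int, cellular_total: float, shared_node_total: float) -> dict:
    """Compare per-device cellular modems against Wi-Fi modems sharing one communications node."""
    return {
        "cellular_per_device": cellular_total / n_devices,
        "shared_per_device": shared_node_total / n_devices,
        "savings": cellular_total - shared_node_total,
        "savings_fraction": 1 - shared_node_total / cellular_total,
    }


result = cost_comparison(n_devices=30, cellular_total=36_000, shared_node_total=2_500)
print(result["cellular_per_device"])        # 1200.0
print(result["savings"])                    # 33500
print(f"{result['savings_fraction']:.1%}")  # 93.1%
```

On those numbers the shared-node design costs about 7 percent of the cellular approach, in line with the roughly 90 percent savings Smith cited for the transmission-switch example.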

Beyond these cost reductions, however, “with that, I’ve got an aggregation point for the data, and because it’s the equivalent of a smartphone, I can put apps on it,” he said. That could include compiling an aggregate view of all 30 voltage regulators into a single set of data points for reduced network traffic and integration complexity, which could then be more easily integrated into a smart-meter-based system for detecting outages “faster, more efficiently, and at lower cost,” he said.
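One such edge "app" might look like the Python sketch below, which collapses 30 per-regulator voltage readings into a single summary record before anything is sent upstream. The input format, the output field names and the 114-126 V band (on a 120 V base) are illustrative assumptions, not Duke settings, and the readings are invented.

```python
from statistics import mean
from typing import Dict


def summarize_regulators(voltages: Dict[str, float],
                         low_v: float = 114.0, high_v: float = 126.0) -> dict:
    """Collapse per-regulator voltage readings into one compact record.

    The 114-126 V band (on a 120 V base) is an illustrative threshold, not a Duke setting.
    """
    values = list(voltages.values())
    return {
        "count": len(values),
        "min_v": min(values),
        "max_v": max(values),
        "mean_v": round(mean(values), 2),
        # Only the exceptions are named individually; everything else is summarized.
        "out_of_band": sorted(rid for rid, v in voltages.items() if not low_v <= v <= high_v),
    }


# Made-up readings for 30 regulators, with one sagging unit.
voltages = {f"VR{i:02d}": 120.0 + (i % 5) * 0.5 for i in range(1, 31)}
voltages["VR07"] = 112.8
summary = summarize_regulators(voltages)
print(summary["count"], summary["out_of_band"])  # 30 ['VR07']
```

Sending one record like this instead of 30 separate streams is the kind of traffic and integration reduction Smith described, while still flagging the specific regulator an outage-detection system would care about.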

“All of these things are made possible, first, by the right communications node architecture,” he said. As for what other analytics “apps” Duke may consider for this distributed architecture, “We’re not sure -- the purpose of the data collection and analytics work is to get those answers,” he said. “Maybe we could add customer analytics to the edge of the grid, and do things we’ve never thought of before.”
